PyTorch and TensorFlow are two of the most popular Python-based deep learning libraries. For a novice machine learning practitioner, it can be difficult to decide which one to use, and without knowing how they differ it is hard to make an informed choice. In this article, we look at some of those differences in practice by building a classifier for the same problem in both frameworks. Finally, we compare how similar models, defined to address the same problem but built on different frameworks, differ in their results. The major points covered in this article are listed below.

Table of contents

- About MNIST digit data

- Brief about TensorFlow

- Brief about PyTorch

- Building deep learning model for image classification

- Model building with TensorFlow

- Model building with PyTorch

- Comparing the performances

Let’s first discuss the MNIST dataset.

About MNIST digit data

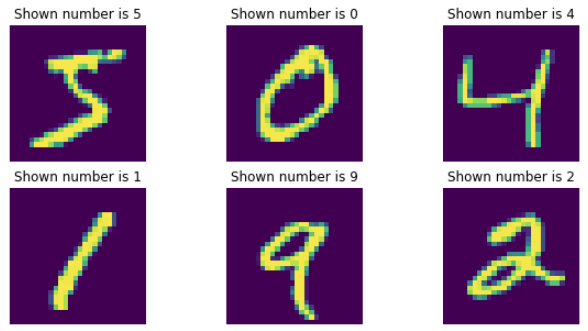

MNIST stands for Modified National Institute of Standards and Technology. The dataset is a collection of small square grayscale images (28 x 28 pixels) of handwritten single digits from 0 to 9, with 60,000 training images and 10,000 test images. The goal is to classify each handwritten digit image into one of ten classes representing the integers 0 to 9.

It is a widely used and well-understood dataset that is, for the most part, considered solved. The best-performing models are deep convolutional neural networks, which reach a classification accuracy above 99 percent, with error rates between 0.2 and 0.4 percent on the hold-out test set. The example images shown here are from the MNIST training set, loaded through the TensorFlow dataset API.

Brief about TensorFlow

Google developed TensorFlow, which was made open source in 2015. It evolved from Google’s in-house machine learning software, which was refactored and optimized for production use.

The term “TensorFlow” refers to the way data is organized and processed. A tensor is the most basic data structure in both TensorFlow and PyTorch. When you use TensorFlow, you build a stateful dataflow graph, which is similar to a flowchart that remembers past events, to perform operations on the data in these tensors.
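To make the idea of a dataflow graph more concrete, here is a minimal sketch in plain Python. It is not TensorFlow's API or implementation, and it leaves out state entirely: the `Node` class and the `const`, `add`, and `mul` helpers are hypothetical names used only to illustrate the "describe the computation first, run it later" pattern.

```python
class Node:
    """A node in a tiny deferred computation graph (illustrative only)."""

    def __init__(self, op, inputs=(), value=None):
        self.op = op
        self.inputs = inputs
        self.value = value

    def run(self):
        """Evaluate this node by first evaluating the nodes it depends on."""
        if self.op == "const":
            return self.value
        args = [node.run() for node in self.inputs]
        if self.op == "add":
            return args[0] + args[1]
        if self.op == "mul":
            return args[0] * args[1]
        raise ValueError(f"unknown op: {self.op}")


def const(value):
    return Node("const", value=value)


def add(a, b):
    return Node("add", (a, b))


def mul(a, b):
    return Node("mul", (a, b))


# Step 1: describe the computation (2 + 3) * 4. Nothing is computed yet.
graph = mul(add(const(2), const(3)), const(4))

# Step 2: execute the graph.
result = graph.run()
print(result)  # 20
```

Building `graph` only records which operations depend on which inputs; the arithmetic happens when `run()` is called.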

TensorFlow is known as a high-performance deep learning library. It has a large and active user base, along with a wide range of official and third-party models, tools, and platforms for training, deployment, and serving.

Brief about PyTorch

PyTorch is one of the more recent deep learning frameworks, developed by a team at Facebook and released on GitHub in 2017. It has gained popularity thanks to its ease of use, simplicity, dynamic computational graph, and efficient memory usage. It is imperative, meaning operations run immediately as they are called, so you can test a piece of code to see whether it works before writing the rest of the program.

Because PyTorch integrates closely with Python, we can write a section of code and run it right away, as with ordinary Python code. Its user-friendly interface quickly made it popular, prompting the TensorFlow team to incorporate its most popular features into TensorFlow 2.0.

Building deep learning model for image classification

In this section, we compare the usability and ease of use of TensorFlow and PyTorch on the widely used MNIST dataset of handwritten digits. With each framework, we go through the minimal steps needed to get a working classification model: data loading, preprocessing, model building, training, and visualizing the results. For both models, I tried to keep the layers and hyperparameter configurations the same.

Let’s start with TensorFlow.

Model building with TensorFlow

Let’s build a convolutional neural network model for image classification in TensorFlow.

    import tensorflow
    from tensorflow.keras.datasets import mnist
    from tensorflow.keras.utils import to_categorical
    from tensorflow.keras.layers import Conv2D, Flatten, Dense, MaxPooling2D
    from tensorflow.keras.models import Sequential
    import matplotlib.pyplot as plt

Load and pre-process the data. Here, preprocessing means reshaping the images from 28 x 28 to 28 x 28 x 1, i.e. adding a colour channel dimension, where 1 signifies the single grey channel. Next, we one-hot encode the class labels, so that each label becomes a binary vector, and lastly we scale all pixel values to the range 0 to 1.

    # load the data
    (x_train, y_train), (x_test, y_test) = mnist.load_data()

    # reshape to (samples, 28, 28, 1) to add the single grayscale channel
    x_train = x_train.reshape(x_train.shape[0], 28, 28, 1)
    x_test = x_test.reshape(x_test.shape[0], 28, 28, 1)

    # one-hot encode the labels
    y_train = to_categorical(y_train)
    y_test = to_categorical(y_test)

    # scale pixel values to the range [0, 1]
    x_train = x_train.astype('float32') / 255.0
    x_test = x_test.astype('float32') / 255.0
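To make the label encoding and pixel scaling concrete, here is a small dependency-free sketch on toy values. The helpers `one_hot` and `scale_pixels` are hypothetical names written for this illustration; they mimic, in simplified form, what `to_categorical` and the division by 255 do to the real arrays.

```python
def one_hot(labels, num_classes):
    """Return one row per label, with 1.0 at the label's index and 0.0 elsewhere."""
    return [
        [1.0 if i == label else 0.0 for i in range(num_classes)]
        for label in labels
    ]


def scale_pixels(pixels, max_value=255.0):
    """Map raw 0..255 pixel intensities to the range 0..1."""
    return [p / max_value for p in pixels]


# Toy inputs (assumed for illustration): two labels and three pixel values.
encoded = one_hot([3, 0], num_classes=5)
scaled = scale_pixels([0, 51, 255])

print(encoded)  # [[0.0, 0.0, 0.0, 1.0, 0.0], [1.0, 0.0, 0.0, 0.0, 0.0]]
print(scaled)   # [0.0, 0.2, 1.0]
```

With MNIST the number of classes is 10, so every label becomes a vector of length 10 with a single 1.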

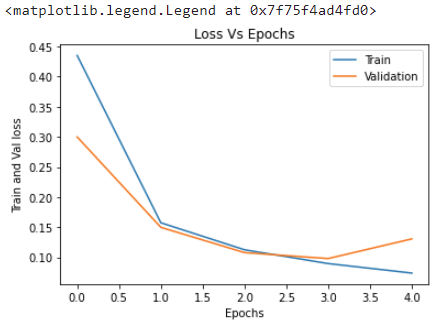

Next, we’ll build the model. It consists of two convolutional layers followed by a max pooling layer and a dense classifier. Stochastic gradient descent is used as the optimizer with a learning rate of 0.01 (the Keras default for 'sgd'), categorical cross-entropy is the loss function, and the model is trained for 5 epochs. The same optimizer, learning rate, and number of epochs are used for the PyTorch model.

    model = Sequential()
    model.add(Conv2D(32, (3,3), input_shape = (28,28,1), activation='relu'))
    model.add(Conv2D(64,(3,3), activation='relu'))
    model.add(MaxPooling2D((2,2)))
    model.add(Flatten())
    model.add(Dense(1024, activation='relu'))
    model.add(Dense(10, activation='softmax'))

    # compile
    model.compile(optimizer='sgd', loss='categorical_crossentropy', metrics=['accuracy'])

    # training
    history = model.fit(x_train, y_train, validation_split=0.3, epochs=5)
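The layer sizes of this Keras model can be checked by hand. The sketch below traces the spatial size through the layers and counts the trainable parameters, assuming the Keras defaults of 'valid' padding and stride 1 for `Conv2D`, and a pooling stride equal to the pool size. The helper names (`conv_out`, `conv_params`, `dense_params`) are hypothetical and exist only for this illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution over a square input."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_params(in_channels, out_channels, kernel):
    """Weights plus one bias per output channel."""
    return kernel * kernel * in_channels * out_channels + out_channels


def dense_params(in_features, out_features):
    """Weights plus one bias per output unit."""
    return in_features * out_features + out_features


side = 28
side = conv_out(side, 3)   # after Conv2D(32, (3,3)): 26
side = conv_out(side, 3)   # after Conv2D(64, (3,3)): 24
side = side // 2           # after MaxPooling2D((2,2)): 12
flat = side * side * 64    # after Flatten(): 9216

total = (
    conv_params(1, 32, 3)
    + conv_params(32, 64, 3)
    + dense_params(flat, 1024)
    + dense_params(1024, 10)
)

print(flat)   # 9216
print(total)  # 9467274
```

Almost all of the parameters sit in the first dense layer, which connects the 9216 flattened features to 1024 units. On a built model, `model.summary()` prints the corresponding figures.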

Shown below are the training and validation losses for the 5 epochs.

This is all the work required to build a minimal image classifier with TensorFlow.

Model building with PyTorch

Let’s build a convolutional neural network model for image classification in PyTorch.

    import torch
    import torch.nn as nn
    import torch.optim as optim
    import torch.nn.functional as F
    from torchvision import datasets, transforms

Load and pre-process the data.

    # pre-processor: resize to 8 x 8, convert to a tensor in [0, 1], then normalize
    transform = transforms.Compose([
        transforms.Resize((8, 8)),
        transforms.ToTensor(),
        transforms.Normalize((0.1307,), (0.3081,))])

    # load the data
    train_dataset = datasets.MNIST(
        'data', train=True, download=True, transform=transform)
    test_dataset = datasets.MNIST(
        'data', train=False, download=True, transform=transform)

    train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=512)
    test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=512)
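The effect of `ToTensor()` followed by `Normalize((0.1307,), (0.3081,))` on a single pixel can be sketched in plain Python. The functions `to_unit_range` and `normalize` are hypothetical stand-ins for what the two transforms do per pixel (scale 0..255 to 0..1, then subtract the mean and divide by the standard deviation); the resize step is left out.

```python
def to_unit_range(pixel):
    """Scale a raw 0..255 intensity to 0..1, as ToTensor does for 8-bit images."""
    return pixel / 255.0


def normalize(value, mean=0.1307, std=0.3081):
    """Standardize a value with the mean and std passed to Normalize."""
    return (value - mean) / std


black = normalize(to_unit_range(0))
white = normalize(to_unit_range(255))

print(round(black, 4))  # -0.4242
print(round(white, 4))  # 2.8215
```

After normalization, pixel values are no longer confined to 0..1; a fully black pixel maps to about -0.42 and a fully white one to about 2.82.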

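Next, as before, we build the model and set up the optimizer and loss function (PyTorch has no separate compile step). Note that this model is not identical to the Keras one: the images are resized to 8 x 8, the pooling layer uses a kernel size of 1 and so leaves the feature maps unchanged, the classifier is a single linear layer without the 1024-unit hidden layer, and the batch size is 512 rather than the Keras default of 32.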

    # Build a model
    class CNNModel(nn.Module):
        def __init__(self):
            super(CNNModel, self).__init__()
            self.conv1 = nn.Conv2d(1, 32, 3, 1)    # 1 input channel, 32 filters, 3 x 3 kernel, stride 1
            self.conv2 = nn.Conv2d(32, 64, 3, 1)
            # 8 x 8 input -> 6 x 6 -> 4 x 4 after the two convolutions: 64 * 4 * 4 = 1024
            self.fc = nn.Linear(1024, 10)

        def forward(self, x):
            x = F.relu(self.conv1(x))
            x = F.relu(self.conv2(x))
            x = F.max_pool2d(x, 1)    # kernel size 1 leaves the feature map size unchanged
            x = torch.flatten(x, 1)
            x = self.fc(x)
            output = F.log_softmax(x, dim=1)
            return output

    net = CNNModel()

    # compiling
    optimizer = optim.SGD(net.parameters(), lr=0.01)
    criterion = nn.CrossEntropyLoss()
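One detail of this setup is worth a closer look: `forward` already applies `F.log_softmax`, while `nn.CrossEntropyLoss` expects raw scores and applies log-softmax internally. The plain-Python sketch below, with hypothetical helper names and toy scores, suggests why this is redundant but harmless: applying log-softmax to values that are already log-probabilities returns them unchanged, so the loss is the same either way.

```python
import math


def log_softmax(scores):
    """Numerically stable log-softmax over a list of scores."""
    m = max(scores)
    log_sum = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_sum for s in scores]


def cross_entropy(scores, target):
    """Negative log-probability of the target class, computed from scores."""
    return -log_softmax(scores)[target]


# Toy scores for three classes (assumed values, for illustration only).
logits = [2.0, 0.5, -1.0]

once = log_softmax(logits)
twice = log_softmax(once)

loss_from_logits = cross_entropy(logits, 0)
loss_from_log_probs = cross_entropy(once, 0)

print(once)
print(twice)
print(loss_from_logits, loss_from_log_probs)
```

A common alternative is to return the raw output of the last linear layer from `forward` and let `nn.CrossEntropyLoss` do the rest, or to keep `log_softmax` and pair it with `nn.NLLLoss`.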

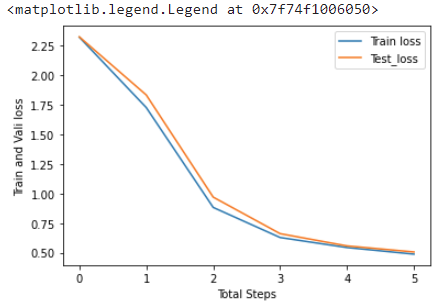

After this, for the training phase, we must write a loop that iterates over the epochs and, within each epoch, over the batches. For each batch, we run the forward pass on the images, compute the loss, backpropagate, and update the weights. This code can be found in the notebook linked below.
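Since the loop itself is not shown here, the following is a minimal sketch of what such a PyTorch training loop typically looks like. It is not the notebook's code: the `train` function is a hypothetical helper, the data is random noise with the same 1 x 8 x 8 input shape as above rather than real MNIST, and a small linear model stands in for `CNNModel` to keep the example short.

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader, TensorDataset


def train(net, loader, optimizer, criterion, epochs):
    """Run a basic training loop and return the mean loss of each epoch."""
    epoch_losses = []
    for _ in range(epochs):
        net.train()
        running, seen = 0.0, 0
        for images, labels in loader:
            optimizer.zero_grad()              # clear gradients from the previous batch
            outputs = net(images)              # forward pass
            loss = criterion(outputs, labels)  # compute the loss
            loss.backward()                    # backward pass
            optimizer.step()                   # update the weights
            running += loss.item() * images.size(0)
            seen += images.size(0)
        epoch_losses.append(running / seen)
    return epoch_losses


torch.manual_seed(0)

# Synthetic stand-in data (assumption): 256 random "images" and random labels.
images = torch.randn(256, 1, 8, 8)
labels = torch.randint(0, 10, (256,))
loader = DataLoader(TensorDataset(images, labels), batch_size=64)

# Hypothetical stand-in model; the CNNModel defined above could be passed instead.
net = nn.Sequential(nn.Flatten(), nn.Linear(64, 10))
optimizer = optim.SGD(net.parameters(), lr=0.01)
criterion = nn.CrossEntropyLoss()

losses = train(net, loader, optimizer, criterion, epochs=5)
print(losses)
```

To train the article's model, the same function could be called with `net`, `train_loader`, `optimizer`, and `criterion` as defined earlier; evaluating on `test_loader` would need a second loop run under `torch.no_grad()` without the backward pass and weight update.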

Now finally we’ll take a look at the loss curve.

Comparing the performances

In the two graphs above, the loss curve of the TensorFlow model drops steeply, and after the 3rd epoch the loss on the validation set appears to increase, which may indicate the onset of overfitting. In short, learning in the TensorFlow model progresses abruptly, and further tuning would likely be needed.

In the PyTorch model, by contrast, even though the model-building procedure looks more complex, the training loss decreased smoothly throughout, and the validation loss closely followed the training loss.
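The point at which a validation loss stops improving, as described for the TensorFlow curve, can also be located programmatically rather than read off a plot. The sketch below uses made-up loss values, not the results measured in this article, and the helper names are hypothetical. With Keras, the real per-epoch values would come from `history.history['val_loss']`.

```python
def best_epoch(val_losses):
    """Return the 1-based epoch with the lowest validation loss."""
    lowest = min(val_losses)
    return val_losses.index(lowest) + 1


def rises_after(val_losses, epoch):
    """Return True if every later epoch has a higher validation loss than `epoch`."""
    reference = val_losses[epoch - 1]
    return all(loss > reference for loss in val_losses[epoch:])


# Hypothetical validation losses for 5 epochs (illustrative, not measured values).
val_losses = [0.62, 0.41, 0.33, 0.36, 0.40]

epoch = best_epoch(val_losses)
print(epoch)                           # 3
print(rises_after(val_losses, epoch))  # True
```

A validation loss that keeps rising after its minimum while the training loss keeps falling is the usual sign that training could be stopped at that epoch.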

Final words

At this point, we have briefly discussed TensorFlow and PyTorch and seen the minimal modelling procedure for image classification in each. Judging by the training and test performance in the two graphs, the training and evaluation process is smoother in the PyTorch model. In terms of building blocks, I would say TensorFlow is more beginner-friendly because of its simplified API.
