Lighting is often an overlooked aspect of attraction design. Look up while riding Spaceship Earth and you'll find numerous theater-style lighting fixtures. Consider how the lighting in the Sistine Chapel scene differs from the lighting in the computer lab scene. Lighting designers illuminate these scenes much as they would a television production or stage show. A new Disney invention could replace some of that traditional lighting by moving lighting effects into an augmented reality viewer.

The "Introducing Real-time Lighting Effects to Illuminate Real-world Physical Objects in See-through Augmented Reality Displays" patent application (US 2021/0097757 A1), published April 1, 2021, sheds some light on the invention. A shader and a light source generator apply virtual illumination to physical objects viewed through an augmented reality (AR) display.

> An example method generally corresponds to a three-dimensional geometry of an environment in which the augmented reality display is operating, and the shader generally comprises a plurality of vertices forming a plurality of polygons. A computer generated lighting source is introduced into the augmented reality display. One or more polygons of the shader are illuminated based on the computer-generated lighting source, thereby illuminating one or more real-world objects in the environment with direct lighting from the computer-generated lighting source and reflected and refracted lighting from surfaces in the environment.
>
> Patent Application US 2021/0097757 A1

The application's abstract is rather dense, so let's simplify it. The system maps three-dimensional objects in the real world. The shader identifies points on each object; those points are the vertices. The vertices are then connected to form numerous polygons, giving the computer a model of the real-world object.

A computer-generated light source then illuminates the polygons in the shader. The rendered lighting is overlaid on the real-world objects through the AR display, so the objects appear lit by both the virtual light itself and its reflections and refractions off nearby surfaces.
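To make the vertices-to-polygons-to-lighting idea more concrete, here is a minimal sketch of lighting a polygon mesh with a single virtual point light. It assumes the scene has already been reduced to triangles and uses plain Lambertian (diffuse) shading with inverse-square falloff, a standard graphics technique swapped in for illustration. The patent application does not specify its shading model, and this sketch ignores the reflected and refracted light the abstract mentions. The names `PointLight` and `shade_polygons` are hypothetical.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


@dataclass
class PointLight:
    """Hypothetical virtual light source placed in the mapped scene."""
    position: Vec3
    intensity: float


def shade_polygons(vertices: list[Vec3],
                   triangles: list[tuple[int, int, int]],
                   light: PointLight) -> list[float]:
    """Return one brightness value per triangle (direct diffuse light only)."""
    brightness = []
    for i0, i1, i2 in triangles:
        v0, v1, v2 = vertices[i0], vertices[i1], vertices[i2]
        # Face normal from the triangle's winding order.
        normal = normalize(cross(sub(v1, v0), sub(v2, v0)))
        # Light the triangle as if all of it sat at its centroid.
        centroid = tuple((v0[k] + v1[k] + v2[k]) / 3 for k in range(3))
        to_light = sub(light.position, centroid)
        dist_sq = dot(to_light, to_light)
        # Lambert's cosine law; faces pointing away from the light get nothing.
        cos_theta = max(0.0, dot(normal, normalize(to_light)))
        brightness.append(light.intensity * cos_theta / dist_sq)
    return brightness


# A real-world tabletop mapped as two triangles in the plane y = 0.
table = [(-1.0, 0.0, -1.0), (1.0, 0.0, -1.0), (1.0, 0.0, 1.0), (-1.0, 0.0, 1.0)]
faces = [(0, 2, 1), (0, 3, 2)]
lit_from_above = shade_polygons(table, faces, PointLight((0.0, 2.0, 0.0), 10.0))
lit_from_below = shade_polygons(table, faces, PointLight((0.0, -2.0, 0.0), 10.0))
```

With the light above the table, both triangles receive the same positive brightness; with the light below it, both come out dark. In an AR viewer, these per-polygon values would drive what gets drawn over the real table.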

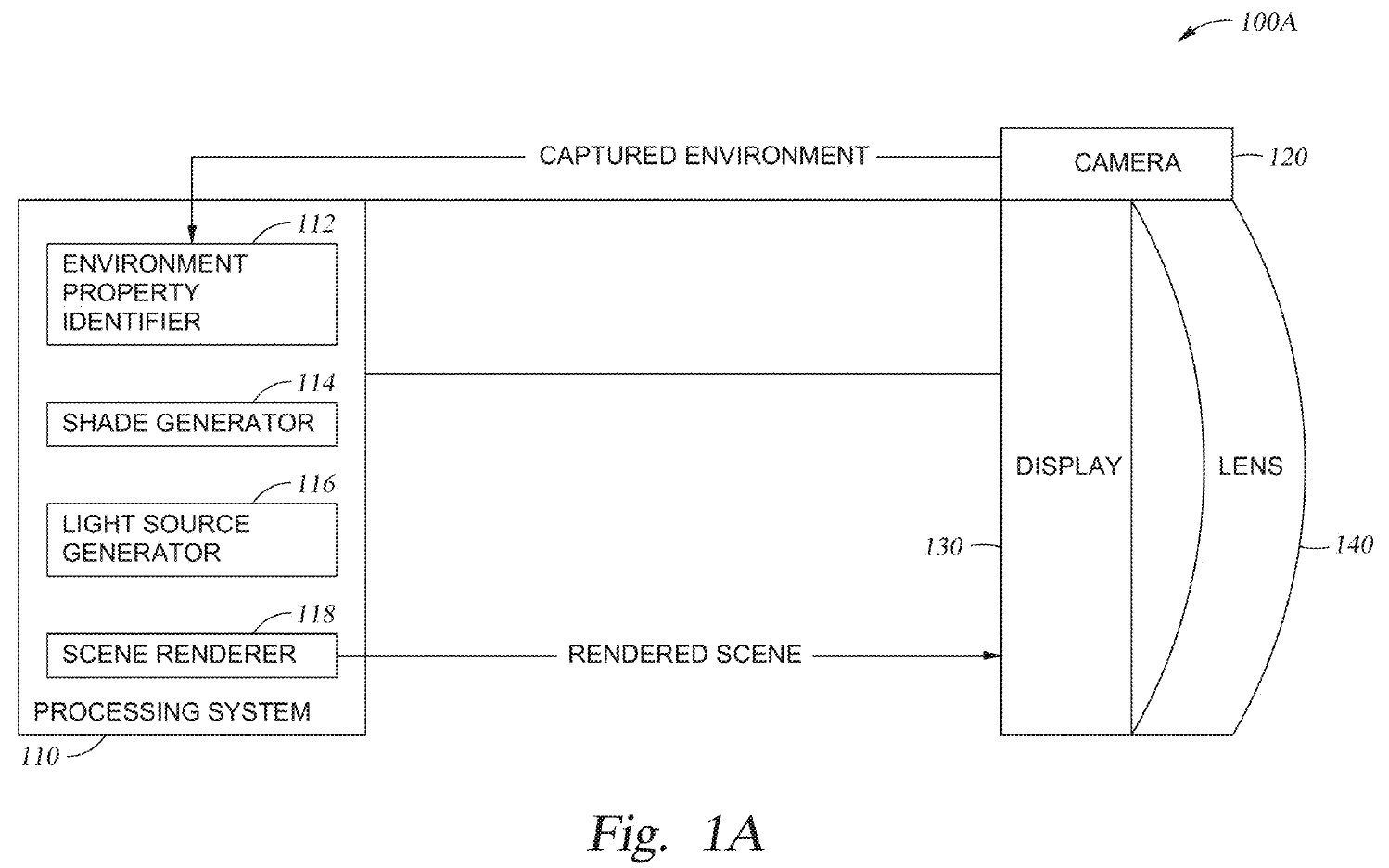

Fig. 1A provides an overview of one implementation of the system. The user wears an AR viewer (100A). A camera (120) captures the real-world environment and sends the captured data to the processing system (110). The processing system analyzes the captured environment (112), shades the objects by creating polygons (114), generates virtual lighting (116), and then renders the scene (118). The rendered lighting is displayed over the see-through lens (140), so the user sees virtual lighting effects applied to the real-world objects.
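The Fig. 1A flow reads naturally as a four-stage pipeline. Below is a minimal structural sketch, assuming each camera capture arrives as a list of 3D points. Every function name, the fan triangulation (valid only for points ordered around a convex outline), and the light placement are hypothetical placeholders chosen for illustration, not details from the patent application. The comments map each stage to the figure's reference numbers.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class CameraFrame:
    """Hypothetical stand-in for a camera (120) capture: sampled 3D points."""
    points: list[Vec3]


def identify_environment(frame: CameraFrame) -> list[Vec3]:
    """Step 112: estimate scene geometry. Here the points simply become vertices."""
    return list(frame.points)


def build_shader_mesh(vertices: list[Vec3]) -> list[tuple[int, int, int]]:
    """Step 114: connect vertices into triangles (naive fan triangulation)."""
    return [(0, i, i + 1) for i in range(1, len(vertices) - 1)]


def generate_lighting(vertices: list[Vec3]) -> dict:
    """Step 116: place one virtual point light 1 unit above the highest vertex."""
    top = max(v[1] for v in vertices)
    return {"position": (0.0, top + 1.0, 0.0), "intensity": 1.0}


def render_overlay(vertices, triangles, light) -> dict:
    """Step 118: package what the see-through display (140) would draw."""
    return {"vertex_count": len(vertices), "triangles": triangles, "light": light}


def process_frame(frame: CameraFrame) -> dict:
    """Run the stages in the order the figure describes."""
    vertices = identify_environment(frame)
    triangles = build_shader_mesh(vertices)
    light = generate_lighting(vertices)
    return render_overlay(vertices, triangles, light)


overlay = process_frame(CameraFrame([(-1.0, 0.0, -1.0), (1.0, 0.0, -1.0),
                                     (1.0, 0.0, 1.0), (-1.0, 0.0, 1.0)]))
```

Keeping each stage as a separate function mirrors the figure and makes it easy to see how the stages could be split between devices, with some running on the headset and others elsewhere.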

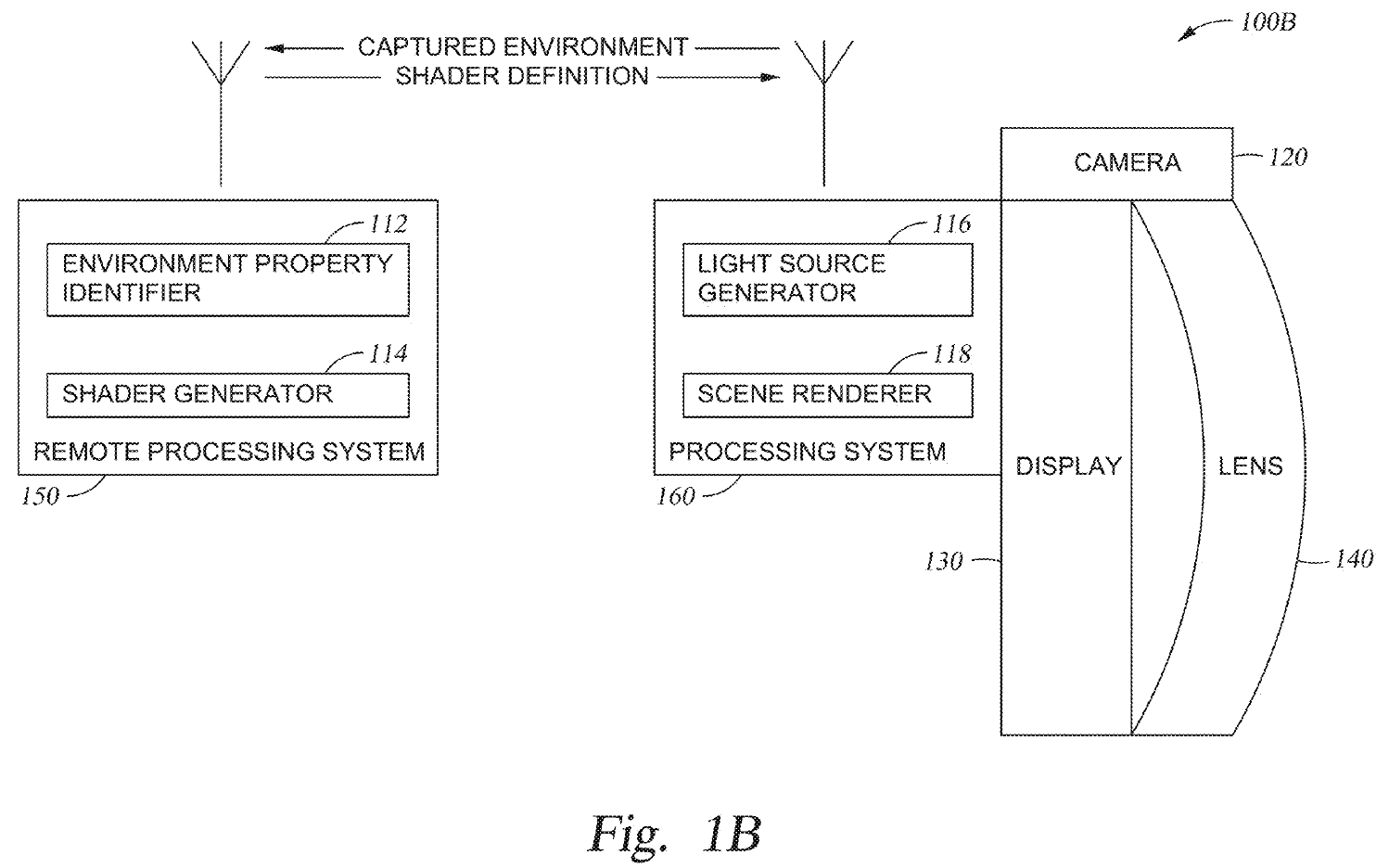

A second version of the system (Fig. 1B) offloads some processing to a networked computer. In this version, environment identification (112) and shading (114) are handled remotely. A processing system (160) in the AR viewer then generates the lighting effects (116) and renders the augmented scene (118) to the display (130). This setup could allow for smaller, more affordable AR viewers.

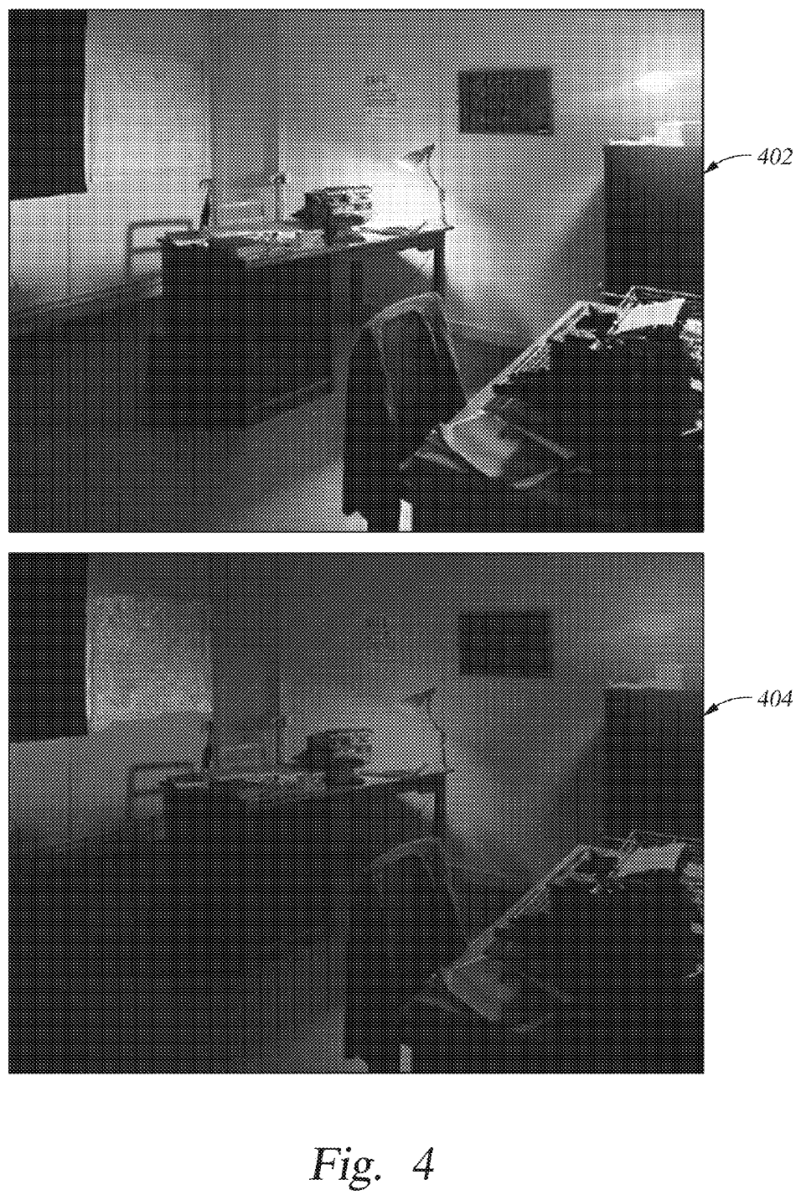

Fig. 4 shows the same real-world office scene twice. The top image (402) does not include the virtual lighting effects. In the second image (404), the rendered lighting effects make the office lighting noticeably starker. This is the only example provided in the patent application.

This invention could change the way attractions are lit. While it likely wouldn't eliminate traditional lighting, it could open up many new lighting possibilities.


Will augmented reality enhance current and future Disney attractions? Or are practical effects and lighting the only way to go? Be sure to share your thoughts in the comments.
