Recently, a team of researchers from DeepMind, Google Brain and the University of Toronto unveiled a new reinforcement learning agent known as DreamerV2. The agent learns behaviours purely from predictions in the compact latent space of a powerful world model. According to the researchers, DreamerV2 is the first agent based on a world model to achieve human-level performance on the Atari benchmark.

From driverless cars to beating Go world champions, reinforcement learning has come a long way. According to the researchers, to operate successfully in unknown environments, reinforcement learning agents need to learn about their environments over time, and world models are an explicit way to represent an agent’s knowledge about its environment.

The Motivation

World models can learn from fewer interactions, enable forward-looking exploration, facilitate generalisation from offline data and allow knowledge to be reused across multiple tasks. Unlike model-free reinforcement learning, which learns purely through trial and error, a world model can predict the outcomes of potential actions, which enables planning.

However, despite these intriguing properties, world models have so far not been accurate enough to compete with state-of-the-art model-free algorithms on the most competitive benchmarks. To close this gap and reach human-level performance, the researchers created DreamerV2.

Genesis

DreamerV2 is the first reinforcement learning agent based on a world model to achieve human-level performance on the popular Atari benchmark. It is the second generation of the original Dreamer agent, which learns behaviours purely within the latent space of a world model trained from pixels.

Developed by the same team last year, Dreamer is a reinforcement learning agent that solves long-horizon tasks from images purely by latent imagination. More specifically, Dreamer learns a world model from past experience and efficiently learns far-sighted behaviours in its latent space by backpropagating value estimates through imagined trajectories.
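To make "backpropagating value estimates through imagined trajectories" more concrete, here is a minimal Python sketch of the λ-return target that such an agent trains its critic toward and that its actor tries to maximise. It assumes one common recursive form, V_t = r_t + γ((1 - λ)v(s_{t+1}) + λV_{t+1}), bootstrapped with the critic's value at the imagination horizon. The function name `lambda_returns` and the default γ and λ values are illustrative assumptions. In the real agent, rewards and values come from neural networks and an autodiff framework carries gradients back through these targets, which this plain-Python sketch leaves out.

```python
from typing import List


def lambda_returns(rewards: List[float], values: List[float],
                   gamma: float = 0.99, lam: float = 0.95) -> List[float]:
    """Compute lambda-returns for one imagined trajectory (hypothetical helper).

    rewards[t] is the predicted reward at imagined step t (t = 0..H-1).
    values[t] is the critic's estimate v(s_t) for t = 0..H, so `values`
    has one more entry than `rewards`; values[H] bootstraps the tail.
    The defaults for gamma and lam are illustrative assumptions.
    """
    if len(values) != len(rewards) + 1:
        raise ValueError("values must have exactly one more entry than rewards")
    horizon = len(rewards)
    returns = [0.0] * horizon
    next_return = values[horizon]  # bootstrap from the critic at the horizon
    for t in reversed(range(horizon)):
        # Blend the one-step bootstrap v(s_{t+1}) with the longer lambda-return.
        next_return = rewards[t] + gamma * ((1 - lam) * values[t + 1] + lam * next_return)
        returns[t] = next_return
    return returns


# Usage: lam=0 gives one-step targets, lam=1 gives discounted sums bootstrapped at the end.
print(lambda_returns([1.0, 1.0], [0.0, 0.0, 10.0], gamma=0.5, lam=1.0))  # [4.0, 6.0]
```

Setting λ between 0 and 1 trades off the bias of relying on the learned critic against the variance of relying on long imagined reward sequences.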

The DreamerV2 world model learns its representations from general information in the images, and it still predicts future task rewards accurately even when those representations are not shaped by the rewards.

The Tech Behind

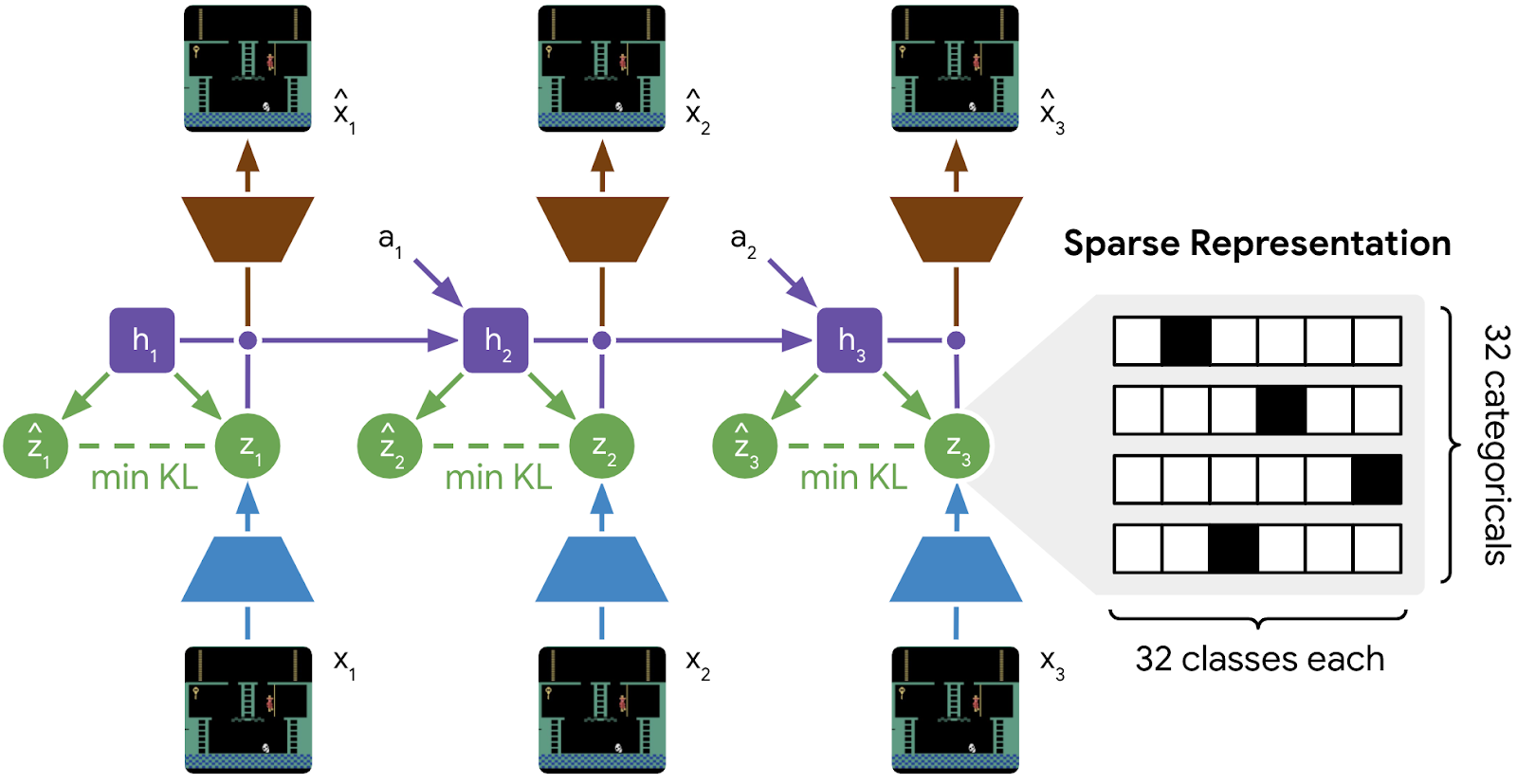

The agent learns a world model and uses it to train actor-critic behaviours purely from predicted trajectories. The world model is built on the Recurrent State-Space Model (RSSM), a latent dynamics model with both deterministic and stochastic components, which allows it to predict a variety of possible futures, as needed for robust planning, while remembering information over many time steps. The RSSM uses a Gated Recurrent Unit (GRU) to compute the deterministic recurrent states.
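The following toy Python sketch shows how an RSSM rolls forward in imagination: a GRU updates the deterministic state h from the previous stochastic state z and action a, and a new z is then sampled from a prior conditioned on h. It is an illustration under stated assumptions, not the researchers' code. The class `TinyRSSM`, its random untrained weights, the bias-free GRU and the Gaussian stochastic state (the kind used by the original Dreamer) are all hypothetical simplifications, and the image-conditioned posterior used during training is left out.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Small random weights; a real model would learn these by gradient descent."""
    return [[rng.gauss(0.0, 0.3) for _ in range(cols)] for _ in range(rows)]


class TinyRSSM:
    """Hypothetical toy RSSM: GRU deterministic state h plus Gaussian stochastic state z."""

    def __init__(self, h_dim: int, z_dim: int, a_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        in_dim = z_dim + a_dim + h_dim  # each GRU gate sees [z, a, h]
        self.w_update = rand_matrix(h_dim, in_dim, rng)
        self.w_reset = rand_matrix(h_dim, in_dim, rng)
        self.w_cand = rand_matrix(h_dim, in_dim, rng)
        self.w_mean = rand_matrix(z_dim, h_dim, rng)
        self.w_logstd = rand_matrix(z_dim, h_dim, rng)

    def gru(self, h: Vector, x: Vector) -> Vector:
        """Deterministic path: h_t = GRU(h_{t-1}, [z_{t-1}, a_{t-1}]), biases omitted."""
        xh = x + h  # list concatenation, not element-wise addition
        u = [sigmoid(v) for v in matvec(self.w_update, xh)]  # update gate
        r = [sigmoid(v) for v in matvec(self.w_reset, xh)]  # reset gate
        gated = x + [ri * hi for ri, hi in zip(r, h)]
        cand = [math.tanh(v) for v in matvec(self.w_cand, gated)]
        return [(1.0 - ui) * hi + ui * ci for ui, hi, ci in zip(u, h, cand)]

    def prior(self, h: Vector, rng: random.Random) -> Vector:
        """Stochastic path: sample z_t ~ N(mean(h_t), std(h_t)) without seeing an image."""
        mean = matvec(self.w_mean, h)
        std = [math.exp(s) for s in matvec(self.w_logstd, h)]
        return [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mean, std)]

    def imagine(self, h: Vector, z: Vector, actions: List[Vector],
                rng: random.Random) -> List[Tuple[Vector, Vector]]:
        """Roll the model forward in latent space for a sequence of actions."""
        states = []
        for a in actions:
            h = self.gru(h, z + a)
            z = self.prior(h, rng)
            states.append((h, z))
        return states


# Usage: imagine three steps from an all-zero initial state.
model = TinyRSSM(h_dim=4, z_dim=2, a_dim=1)
trajectory = model.imagine([0.0] * 4, [0.0] * 2, [[1.0], [0.0], [-1.0]], random.Random(1))
for h, z in trajectory:
    print([round(v, 3) for v in h], [round(v, 3) for v in z])
```

Keeping h deterministic lets the model carry information reliably across many steps, while sampling z lets repeated rollouts from the same state branch into different possible futures.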


DreamerV2 introduces two new techniques to the RSSM. According to the researchers, these techniques lead to a substantially more accurate world model for learning successful policies:

- The first technique represents each image with multiple categorical variables instead of the Gaussian variables used by previous world models.

- The second technique is KL balancing. It weights the KL loss so that the predictions (the prior) move toward the representations (the posterior) faster than the representations move toward the predictions.
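Both techniques can be sketched in plain Python. The sketch below assumes the layout reported for DreamerV2 on Atari (32 categorical variables with 32 classes each) and a balancing weight α = 0.8; the function names are hypothetical. Because plain Python has no automatic differentiation, `balanced_kl` writes out the gradients analytically, which makes the effect of balancing visible: the loss value is an ordinary KL divergence, but the prior (prediction) receives a fraction α of its gradient while the posterior (representation) receives only 1 - α. For brevity it handles a single categorical variable; a full loss would sum over all of them.

```python
import math
import random
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_categorical_latent(logits: List[List[float]], rng: random.Random) -> List[float]:
    """Sample one class per categorical variable and concatenate the one-hot vectors.

    In the agent, gradients pass through this sample with a straight-through
    estimator; plain Python has no autodiff, so only the forward sample is shown.
    """
    latent: List[float] = []
    for var_logits in logits:
        probs = softmax(var_logits)
        k = rng.choices(range(len(probs)), weights=probs)[0]
        latent.extend(1.0 if i == k else 0.0 for i in range(len(probs)))
    return latent


def kl(q: List[float], p: List[float]) -> float:
    """KL(q || p) between two categorical distributions."""
    return sum(qi * (math.log(qi) - math.log(pi)) for qi, pi in zip(q, p) if qi > 0)


def balanced_kl(post_logits: List[float], prior_logits: List[float],
                alpha: float = 0.8) -> Tuple[float, List[float], List[float]]:
    """KL balancing for one categorical variable (alpha = 0.8 is an assumption).

    Loss = alpha * KL(sg(q) || p) + (1 - alpha) * KL(q || sg(p)), where q is the
    posterior (representation), p the prior (prediction) and sg stops gradients.
    Both terms have the same value, so only the gradients differ.
    """
    q, p = softmax(post_logits), softmax(prior_logits)
    value = kl(q, p)
    # d KL(q || softmax(l)) / d l_j = p_j - q_j, scaled by alpha for the prior
    grad_prior = [alpha * (pi - qi) for qi, pi in zip(q, p)]
    # d KL(softmax(m) || p) / d m_j = q_j * (log q_j - log p_j - KL), scaled by 1 - alpha
    grad_post = [(1.0 - alpha) * qi * (math.log(qi) - math.log(pi) - value)
                 for qi, pi in zip(q, p)]
    return value, grad_prior, grad_post


# Usage: an assumed 32 x 32 latent and one balanced KL term.
z = sample_categorical_latent([[0.0] * 32 for _ in range(32)], random.Random(0))
print(len(z), sum(z))  # 1024 32.0
loss, g_prior, g_post = balanced_kl([0.5, -1.0, 2.0], [0.0, 0.3, -0.2])
```

With α above 0.5, the prior is pulled toward the representations more strongly than the representations are regularised toward the prior, which is the asymmetry KL balancing is meant to create.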

Wrapping Up

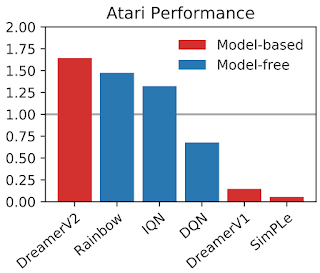

The image above shows DreamerV2 outperforming previous world models. The researchers showed how to learn a world model powerful enough to reach human-level performance on the competitive Atari benchmark.

According to the researchers, DreamerV2 is the first world model that enables learning successful behaviours with human-level performance on this well-established benchmark. DreamerV2 also outperformed top model-free algorithms with the same compute and sample budget, using just a single GPU.

Read the paper, "Mastering Atari with Discrete World Models".
