The thoughts and opinions expressed are those of the writer and not Gamasutra or its parent company.

Welcome back!

In our previous article, we described the process of implementing raytraced shadows in our game engine, The Schmetterling 2.0, and our latest game, The Riftbreaker. We spoke about our approach, the problems we faced, and the solutions we found. We highly recommend reading it before diving into this one. Raytraced shadows are not the only DirectX 12 Ultimate technique that we adopted, however. Today we are going to talk about ambient occlusion, and how we switched from horizon-based ambient occlusion (HBAO) to raytraced ambient occlusion (RTAO).

Ambient occlusion is a common graphics feature of modern video games. It is a technique that estimates how exposed each point in the scene is to ambient light and applies additional shading based on the surroundings of the shaded surface. It is an approximation of a much more complex technique, global illumination (GI). Global illumination can apply additional ambient lighting to indirectly lit surfaces using various methods, one of which is tracing rays of diffuse light. As the rays bounce around the scene, they create a detailed lighting model that adds a lot of realism and visual fidelity. Raytraced ambient occlusion tries to replicate this effect, but instead of following individual light rays and determining which surfaces they are going to hit, it estimates how many rays could potentially reach a surface based on the geometry of its immediate surroundings.

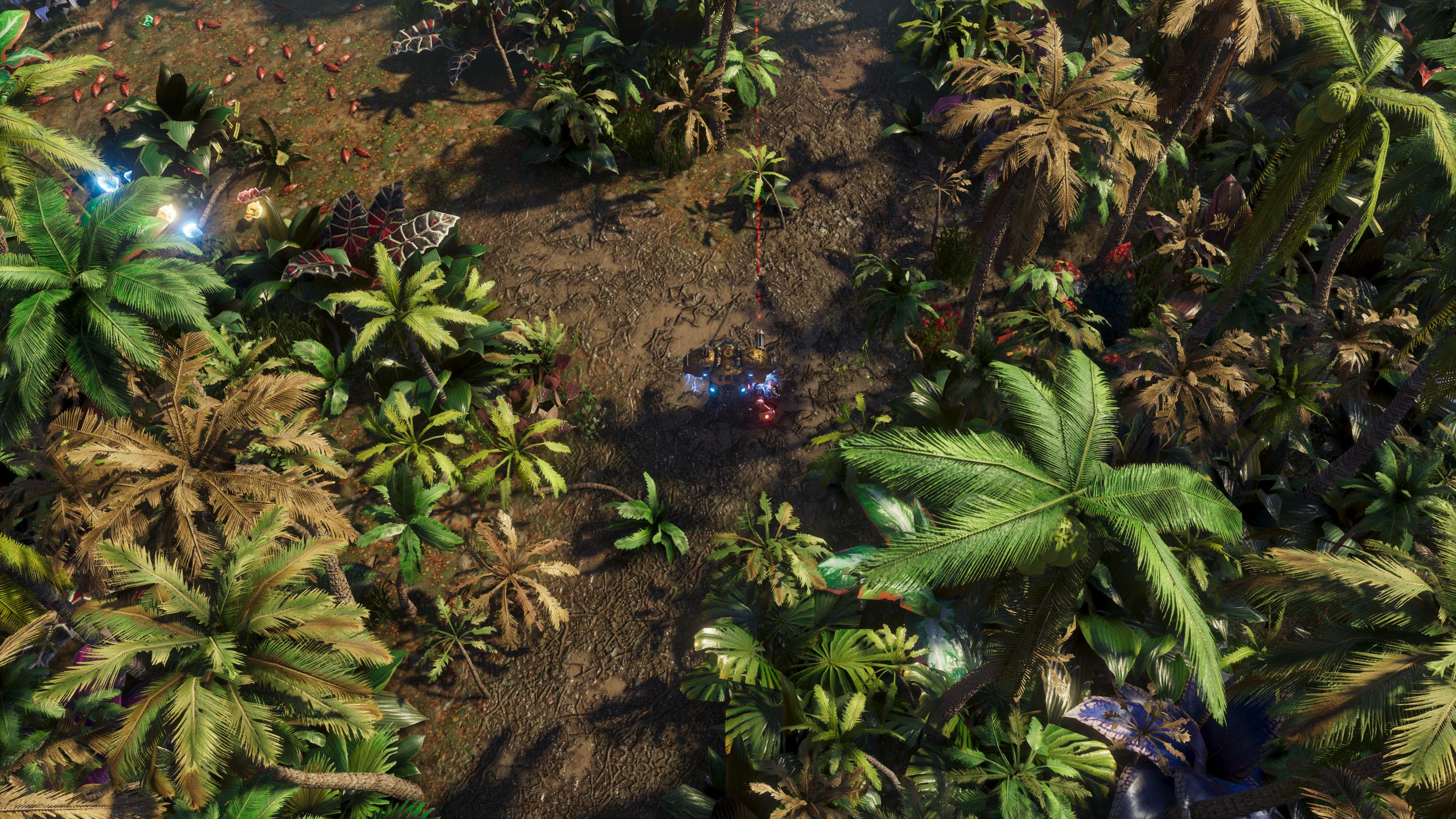

Each GIF in this article compares the results of using Horizon-Based Ambient Occlusion and Ray-Traced Ambient Occlusion in The Riftbreaker. The scenes are presented in both the regular version, as well as the debug one, showing exactly what the AO shader adds to the scene.

Classic ambient occlusion and global illumination algorithms have been in use for decades. However, their high computational cost limited them to offline rendering, for example in movie studios. To emulate the effects of ambient occlusion, game engines started using techniques that rely only on information available in screen space, mainly the depth buffer and normal buffer values of individual pixels. Based on that information it is possible to approximate the level of occlusion for most pixels. This solution is far from perfect because the screen space data is incomplete. For example, the technique cannot be applied properly at the edges of the screen, because we have no information about the geometry beyond them. AO algorithms that do not use raytracing calculate their results from screen space depth differences instead of the actual scene geometry. With hardware capable of real-time raytracing, we can finally implement a much more accurate representation of ambient occlusion in the game world.

RTAO is quite a subtle effect, but it still makes the image much more life-like.

Raytraced ambient occlusion differs significantly from the raytraced shadows that we described in our previous article. In that case, the main point of the algorithm was to trace a ray from an object to the light source in order to determine whether it is lit or not. For ambient occlusion, however, lighting does not matter at all. Instead, each surface checks for objects in its vicinity that could occlude it. Raytracing is a perfect tool for this, since information about the whole scene is available at all times. By shooting rays from the surface in many directions, we can determine which surfaces are occluded with much more precision.

RTAO can create an accurate occlusion map regardless of the scene geometry. Notice the difference in the gaps between dense building clusters and in the open hangar bay door.

In a perfect scenario, we could shoot an incredibly large number of rays in all directions from each surface. This would result in a pixel-perfect representation of the ambience. The hardware available to us at present does not offer as much power as would be necessary for that, so we shoot only a limited number of rays per pixel per frame, in random directions. To achieve good results with such an approach, we need to make use of temporal denoising techniques. Just like in our implementation of raytraced shadows, we need to make do with only one ray per surface per frame. That gives us incomplete data, but by utilizing a denoiser we can reconstruct the image with a high degree of accuracy.

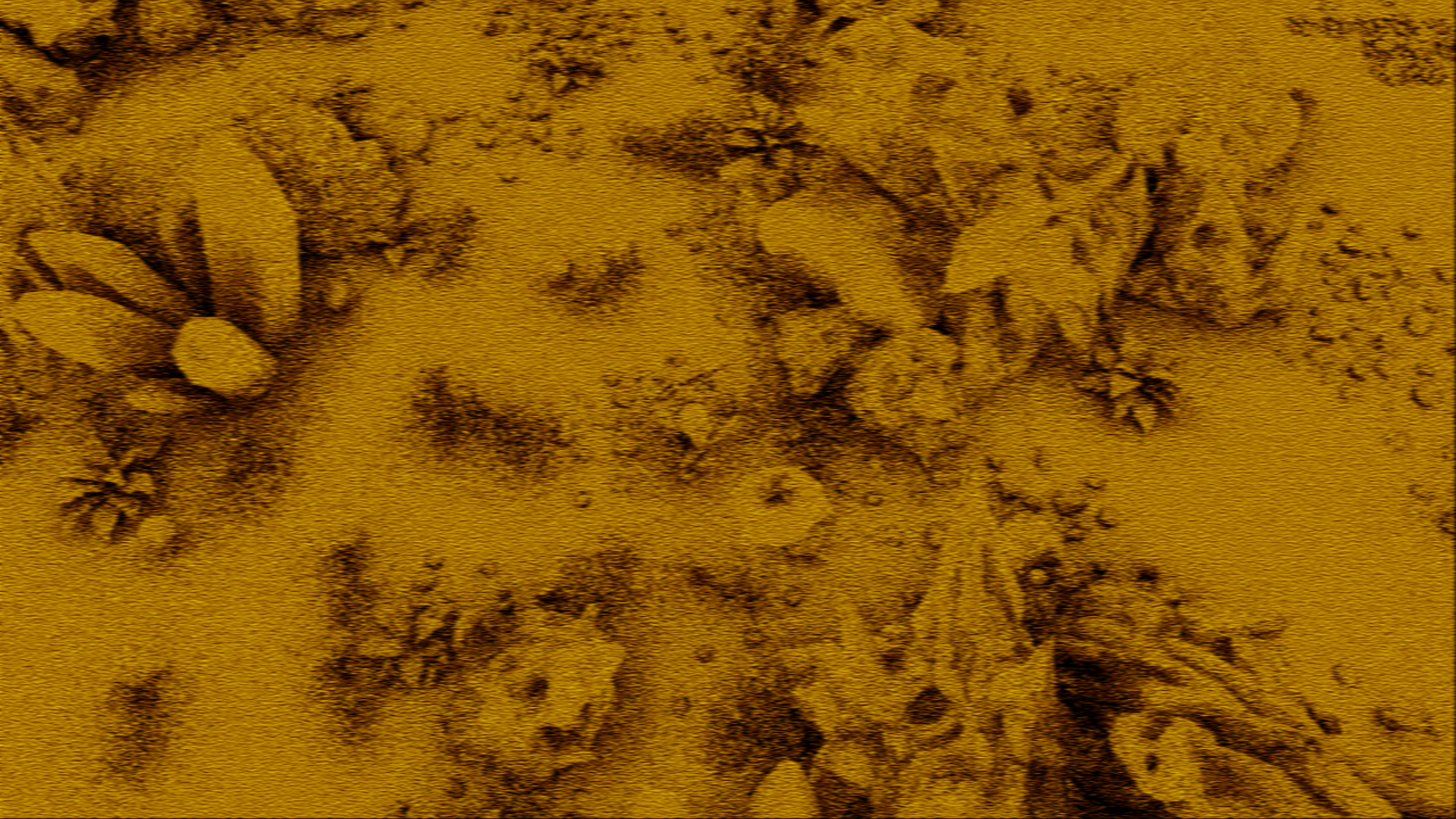

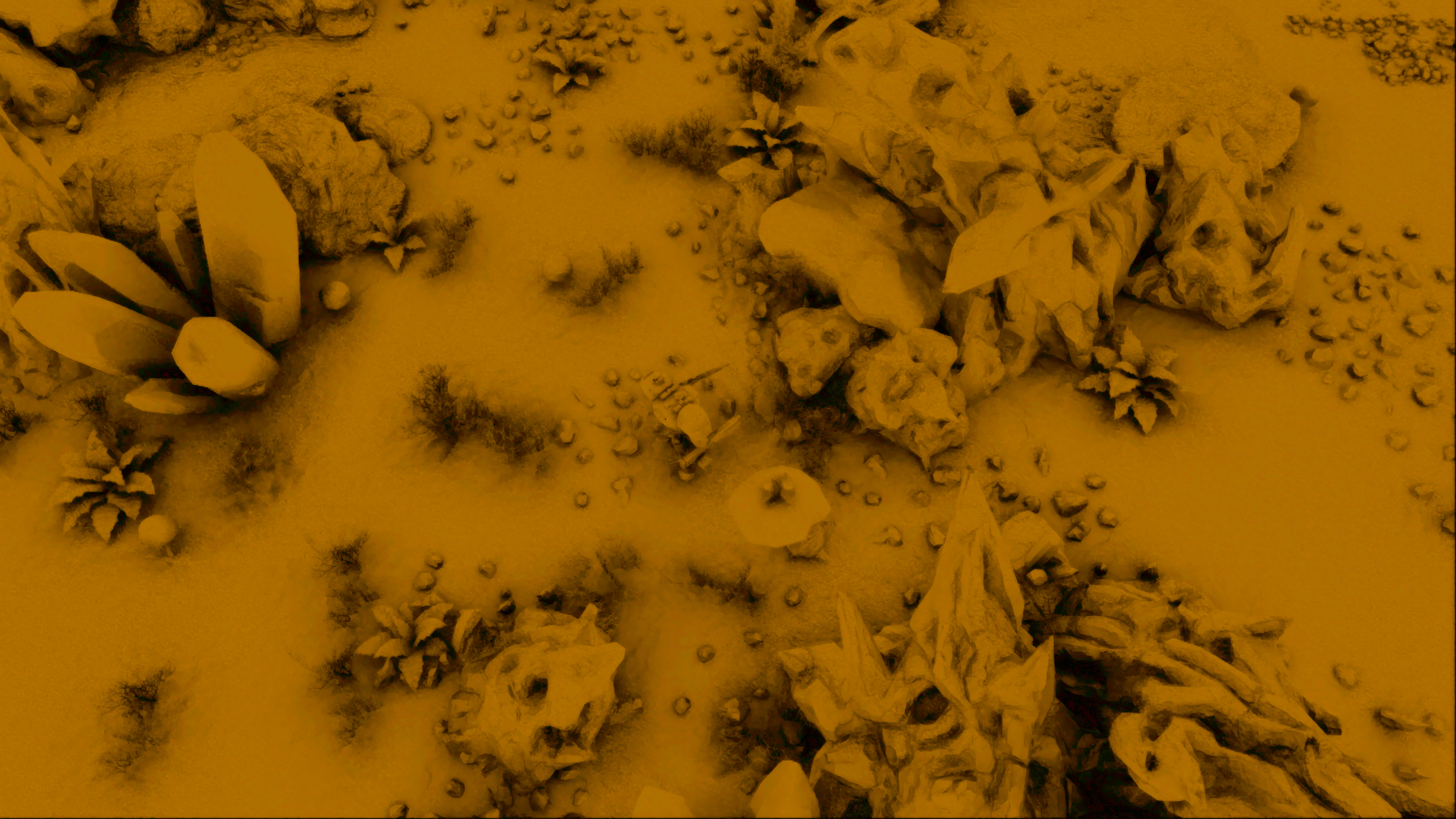

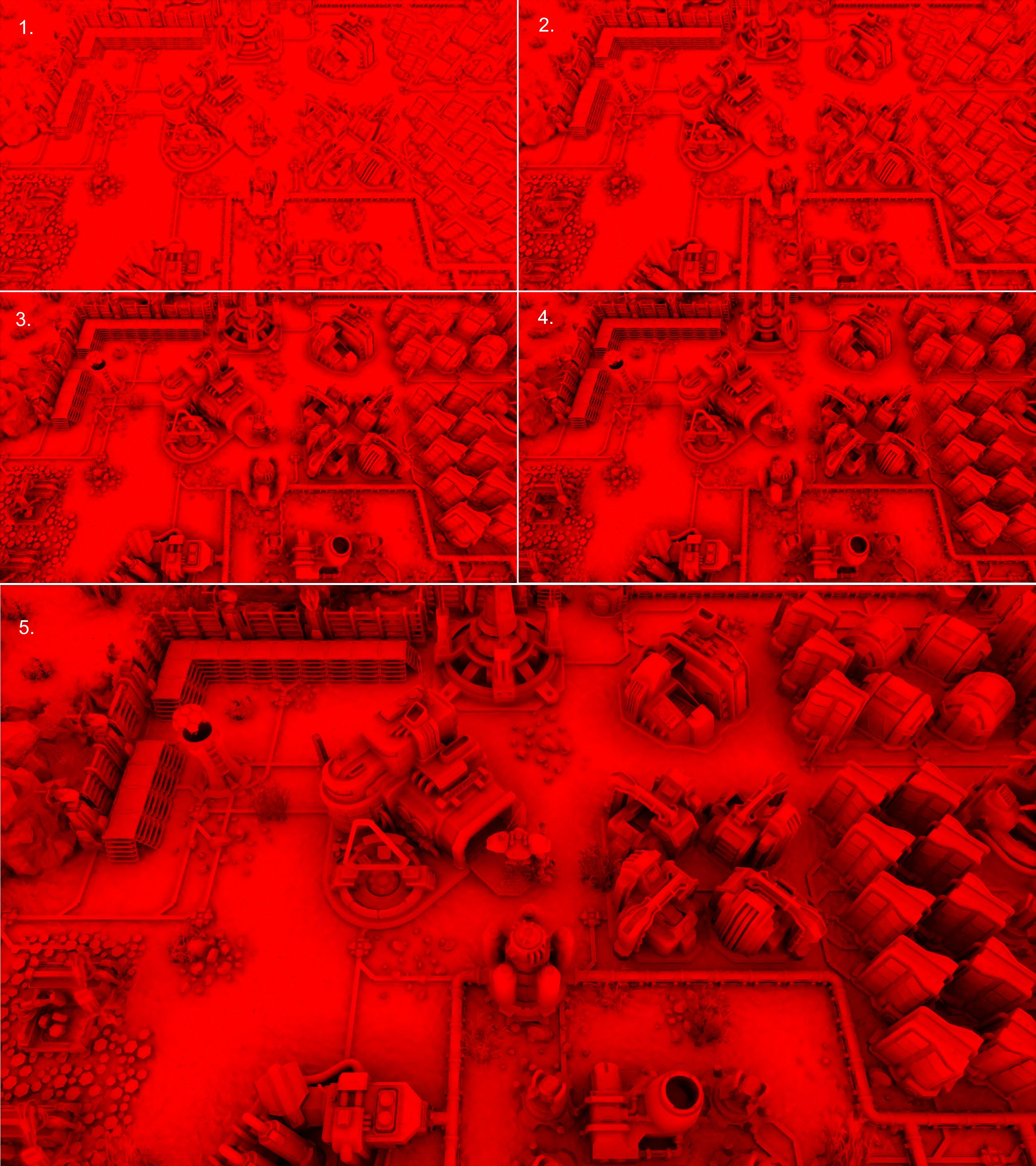

A comparison of the occlusion map pre- (above) and post-denoising (below). Combining the data from many frames allows us to present a good approximation of a much more computationally taxing global illumination.

Raytraced ambient occlusion requires us to prepare acceleration structures, just like raytraced shadows do (see our previous article for more information about acceleration structures). There were two options to choose from: we could either reuse the top-level acceleration structure that we prepared for shadows, or build another one specialized only for tracing the ambience. Luckily, the acceleration structure that we prepare for raytraced shadows contains all the information we need. For raytraced ambient occlusion, we require data about all objects in the camera frustum plus an additional 4-meter margin beyond it. All of this is contained within the top-level acceleration structure for raytraced shadows, so we were able to reuse it. This saves us a lot of computation time every frame.
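The margin requirement can be illustrated with a small selection test. This is only a sketch under our own assumptions, not the engine's code: the article does not describe how objects are chosen for the acceleration structure, so the function names, the plane representation (inward-facing unit normals with an offset) and the box-shaped stand-in for the frustum are all hypothetical.

```python
def signed_distance(plane, point):
    """Distance from `point` to `plane`; positive on the inside of the volume."""
    normal, offset = plane
    return sum(n * p for n, p in zip(normal, point)) + offset


def include_in_tlas(planes, center, radius, margin=4.0):
    """Keep an object if its bounding sphere is within `margin` of the volume.

    `planes` are (unit_normal, offset) pairs with normals pointing inward.
    The test is conservative: near corners it may keep objects that are
    slightly farther away than `margin`.
    """
    return all(
        signed_distance(plane, center) >= -(radius + margin) for plane in planes
    )


# Hypothetical stand-in for a camera frustum: a 10 m axis-aligned box.
BOX_PLANES = [
    ((1.0, 0.0, 0.0), 0.0), ((-1.0, 0.0, 0.0), 10.0),
    ((0.0, 1.0, 0.0), 0.0), ((0.0, -1.0, 0.0), 10.0),
    ((0.0, 0.0, 1.0), 0.0), ((0.0, 0.0, -1.0), 10.0),
]

inside = include_in_tlas(BOX_PLANES, (5.0, 5.0, 5.0), 0.5)          # kept
near_outside = include_in_tlas(BOX_PLANES, (-3.0, 5.0, 5.0), 0.5)   # kept: within 4 m
far_outside = include_in_tlas(BOX_PLANES, (-6.0, 5.0, 5.0), 0.5)    # dropped
```

An object 3 meters outside the volume is kept because it can still occlude surfaces that are visible on screen, while one 6 meters away cannot be reached by a 4-meter ray and is dropped.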

Because the HBAO algorithm lacks the necessary radius of interest, it cannot calculate the occlusion in areas that demand high precision, such as dense forests.

The process of generating an ambient occlusion mask is very similar to raytraced shadows. The only difference is that the raygen shader doesn’t shoot its rays from the surface directly towards the light source. Instead, each surface casts a ray towards a randomized point on the surrounding hemisphere.

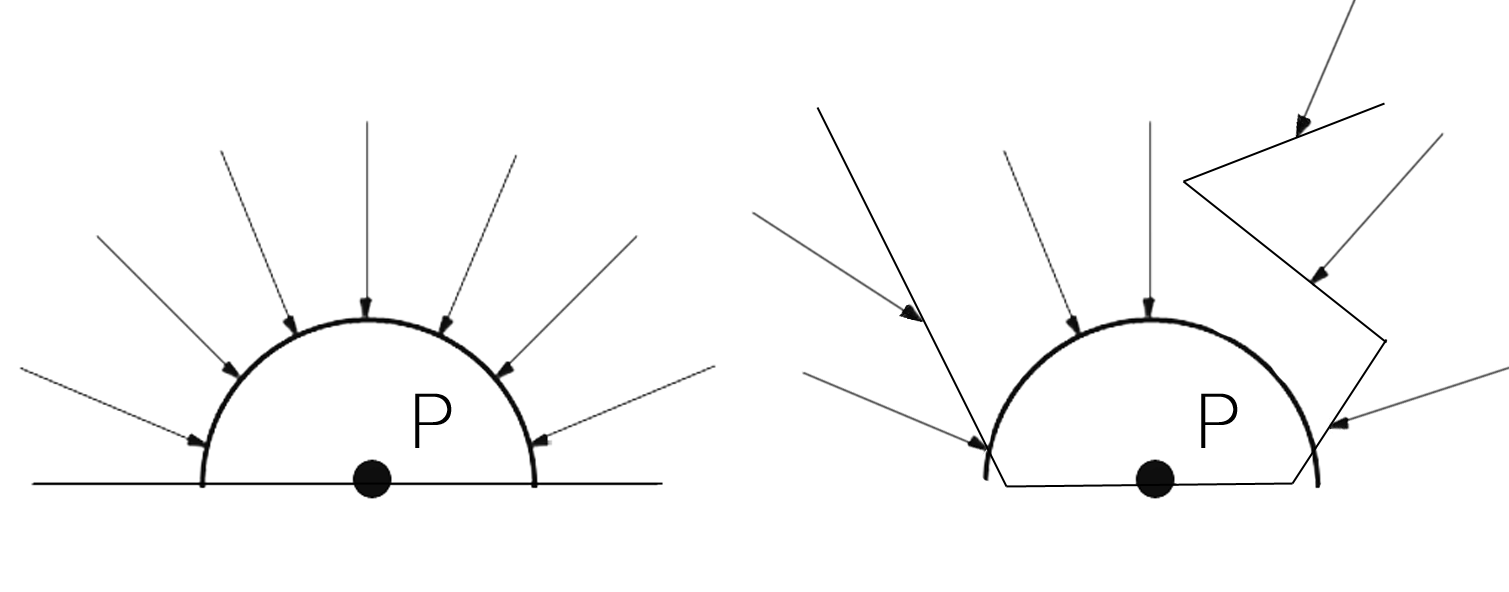

The ‘P’ point to the left is reached by 100% of light rays. The ‘P’ point to the right is being occluded by the geometry of the environment that blocks a part of the light rays that could otherwise reach the hemisphere around that point.
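Picking a random point on the hemisphere around a surface can be sketched as follows. This is an illustration under our own assumptions: it uses a plain pseudo-random generator and uniform sampling, and the function name is hypothetical. It is not the sampling scheme used in the engine or the denoiser.

```python
import math
import random


def sample_hemisphere(normal, rng):
    """Return a unit vector uniformly distributed on the hemisphere around `normal`."""
    # A normalized vector of three Gaussian samples is uniform on the unit sphere.
    while True:
        d = (rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))
        length = math.sqrt(sum(c * c for c in d))
        if length > 1e-9:
            break
    d = tuple(c / length for c in d)
    # Mirror directions that point into the surface so they face along the normal.
    if sum(a * b for a, b in zip(d, normal)) < 0.0:
        d = tuple(-c for c in d)
    return d


# One new ray direction for a surface facing straight up (z is "up" here).
direction = sample_hemisphere((0.0, 0.0, 1.0), random.Random(42))
```

Mirroring a uniformly distributed sphere direction keeps the distribution uniform over the hemisphere, which is why no rejection of "wrong side" samples is needed.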

Every frame the direction of the ray changes, aiming at a different point on the hemisphere. The direction is randomized using the denoiser’s algorithm. If the ray hits an object, it means that the surface is occluded. Naturally, if we cast only one ray and relied solely on that data, the resulting image would be grainy and inaccurate. With the use of temporal denoising techniques, we reconstruct a full quality image by changing the direction of the rays in every frame and combining the results of many frames.
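To show why combining many single-ray frames works, here is a deliberately simple stand-in for temporal accumulation: an exponential moving average over binary hit/miss samples. This is not the AMD denoiser, which is far more sophisticated. The function names, the blend factor `alpha` and the simulated pixel are assumptions made for illustration.

```python
import random


def accumulate(history, sample, alpha=0.02):
    """Blend this frame's binary visibility sample into the running estimate."""
    return (1.0 - alpha) * history + alpha * sample


def simulate_pixel(true_visibility, frames, alpha=0.02, seed=0):
    """Trace one simulated ray per frame and return the accumulated visibility."""
    rng = random.Random(seed)
    estimate = 1.0  # start from "fully unoccluded"
    for _ in range(frames):
        # 1.0 means the ray missed everything, 0.0 means it hit an occluder.
        sample = 1.0 if rng.random() < true_visibility else 0.0
        estimate = accumulate(estimate, sample, alpha)
    return estimate


# A pixel where roughly 70% of hemisphere directions are unoccluded.
estimate = simulate_pixel(true_visibility=0.7, frames=2000)
```

Each individual sample is either 0 or 1, yet the accumulated value settles near the true fraction of unoccluded directions. A smaller `alpha` gives a smoother result but reacts more slowly when the scene changes, which is the trade-off a production denoiser handles with much more care.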

The top-down perspective and the outdoor setting of The Riftbreaker make the game a good fit for this kind of ambient occlusion technique. A large portion of the rays cast from surfaces shoot directly upwards and hit nothing. In such cases we immediately apply the MissShader, which ends the raytracing sequence, skipping unnecessary calculations and freeing up GPU resources. The rays that are cast towards the horizon have a chance to hit an obstacle, and those are the ones that we follow from start to finish. The fewer intersections we have to check, the better for the render time. We set the length of each ray to exactly 4 meters, taking into account the characteristics of our game. Thanks to this we achieve clearly visible shading in severely occluded areas.
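The effect of the fixed ray length can be sketched with a single ray tested against a sphere. This is a CPU-side illustration under our own assumptions: the real work is done by the GPU's raytracing hardware against the acceleration structure, and the function name and the sphere occluders are hypothetical.

```python
import math

MAX_RAY_LENGTH = 4.0  # meters, the ray length quoted in the article


def ray_hits_sphere(origin, direction, center, radius, t_max=MAX_RAY_LENGTH):
    """Return True if a ray with unit-length `direction` hits the sphere within `t_max`."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    discriminant = b * b - c
    if discriminant < 0.0:
        return False  # the ray's line never touches the sphere
    root = math.sqrt(discriminant)
    t_near, t_far = -b - root, -b + root
    # Take the nearest intersection that lies in front of the ray origin.
    t = t_near if t_near > 1e-6 else t_far
    return 1e-6 < t <= t_max


up = (0.0, 0.0, 1.0)
sideways = (1.0, 0.0, 0.0)
surface = (0.0, 0.0, 0.0)

close_hit = ray_hits_sphere(surface, up, (0.0, 0.0, 3.0), 1.0)       # occluder 2 m away
too_far = ray_hits_sphere(surface, up, (0.0, 0.0, 10.0), 1.0)        # beyond 4 m: a miss
wrong_way = ray_hits_sphere(surface, sideways, (0.0, 0.0, 3.0), 1.0)  # ray passes by
```

Anything farther away than the maximum length counts as a miss, so distant geometry never darkens the surface and the ray can be terminated early.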

A comparison between the AO map at 100% resolution (above) and 50% resolution (below). The difference is barely noticeable when looking at a still image and becomes negligible in motion, allowing us to save a lot of performance.

Usually, the biggest problem for raytraced ambient occlusion is ray divergence. Each ambient occlusion ray is cast in a different direction, and the GPU cannot effectively group its tasks because all of them differ from each other. However, since the occlusion map is very stable, we could get away with reducing its resolution by up to 50% in each dimension, which cuts the number of rays needed to create the AO map by a factor of four. The difference in quality is barely visible, as the denoiser can reconstruct the image well even with the limited amount of data available.
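The factor of four follows directly from halving both dimensions of the AO map. The arithmetic can be checked with a short sketch, where the 1920x1080 render resolution is only an assumed example and the function name is hypothetical:

```python
def ao_rays_per_frame(width, height, resolution_scale=1.0, rays_per_pixel=1):
    """Number of AO rays traced per frame for a given AO map scale."""
    scaled_width = int(width * resolution_scale)
    scaled_height = int(height * resolution_scale)
    return scaled_width * scaled_height * rays_per_pixel


full = ao_rays_per_frame(1920, 1080)        # 2,073,600 rays
half = ao_rays_per_frame(1920, 1080, 0.5)   # 518,400 rays
reduction = full / half                     # 4.0
```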

During the implementation of raytraced ambient occlusion into The Riftbreaker, AMD sent us a brand-new version of the denoiser, optimized for our game. It makes use of two specific features of our game: we do not cast more than one ray per frame for any given technique and the result of a raytrace is a simple hit/miss. That gave us improved visual fidelity, as well as boosted performance. It is undeniable that enabling RTAO has an impact on the rendering time, but we did as much as we could in order to minimize the FPS decrease and deliver an improvement in visual quality that is worth it.

In order to measure how much raytraced ambient occlusion affects the real-life performance of the game, we sampled several example scenes. They vary in the number of small objects in the scene, as well as the time of day: because of the angle of the directional light, mornings and evenings produce longer shadows and are more demanding for raytracing. We measured the performance of the game while using our standard rasterization techniques (Horizon-Based Ambient Occlusion (HBAO) and Percentage-Closer Soft Shadows (PCSS)), the raytraced ones (RT shadows and RTAO), as well as combinations of the two groups. The results are as follows:

| | SAMPLE SCENE 1 | SAMPLE SCENE 2 | SAMPLE SCENE 3 | SAMPLE SCENE 4 |
| --- | --- | --- | --- | --- |
| HBAO + PCSS | 349 FPS | 209 FPS | 194 FPS | 157 FPS |
| RTAO + PCSS | 299 FPS | 195 FPS | 207 FPS | 163 FPS |
| HBAO + RT SHADOW | 343 FPS | 201 FPS | 190 FPS | 160 FPS |
| RTAO + RT SHADOW | 290 FPS | 170 FPS | 146 FPS | 125 FPS |

Taking the HBAO + PCSS performance as a baseline, we can see that enabling either raytraced shadows or raytraced ambient occlusion on its own reduces the number of frames per second by anywhere from 30 to 40 percent. However, enabling both RT shadows and RTAO at the same time does not increase the rendering cost as much as one would expect. Instead, we observe a 10-25% further decrease compared to our baseline. The reduced cost of enabling the second raytracing technique is due to the fact that both raytracing techniques utilize the same acceleration structures and parts of the denoiser. Additionally, instead of carrying out all the steps for these two techniques individually, we carry out all the operations simultaneously, leading to much better performance.

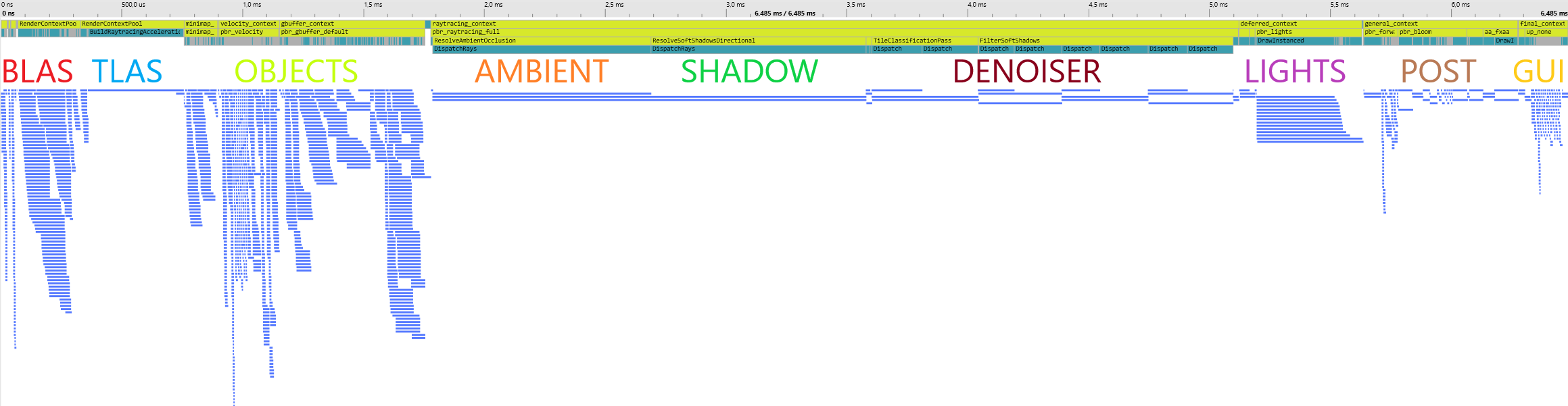

A capture from the PIX profiler shows the exact timing of all the rendering stages for a frame. We aim to parallelize as many operations as possible in order to minimize the output time.

As mentioned before, the acceleration structure for raytracing is prepared with a 4-meter margin to account for objects that may cast shadows, but are located out of the camera frustum. This is relevant to raytraced ambient occlusion, as it sets the maximum sphere radius for AO raycasting to 4 meters. While working on this technique we tested it with various values of this parameter. The visual and performance results were as follows:

0.5m – 183 FPS

1.0m – 183 FPS

2.0m – 181 FPS

3.0m – 178 FPS

4.0m – 175 FPS

In the end, the performance gain from using values lower than 4 meters was negligible. On the other hand, the visual fidelity gained from the extra raycasting range proved to be big enough for us to choose the maximum value as our default.
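To put "negligible" into numbers, the figures listed above can be converted into a relative frame rate drop and a frame time difference. The sketch below only reuses the measurements quoted in this article; the helper names are our own.

```python
# FPS measured for each maximum AO ray length (in meters), as listed above.
fps_by_radius = {0.5: 183, 1.0: 183, 2.0: 181, 3.0: 178, 4.0: 175}


def fps_drop_percent(baseline_fps, fps):
    """Relative frame rate drop compared to the baseline, in percent."""
    return 100.0 * (baseline_fps - fps) / baseline_fps


def frame_time_ms(fps):
    """Average time spent on one frame, in milliseconds."""
    return 1000.0 / fps


baseline = fps_by_radius[0.5]
drop_at_4m = fps_drop_percent(baseline, fps_by_radius[4.0])              # about 4.4 percent
extra_ms_at_4m = frame_time_ms(fps_by_radius[4.0]) - frame_time_ms(baseline)  # about 0.25 ms
```

Going from the shortest to the longest ray length costs roughly a quarter of a millisecond per frame in this measurement.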

This is not the end of our work on expanding the raytraced rendering capabilities of the Schmetterling engine. We see great potential in researching tile-based adaptive light shadow calculation to support a much larger number of dynamic shadow-casting lights on screen. Another area of active research is adding support for a hybrid combination of raytraced and screen space reflections. We will be sure to share the results of our work in these fields in the future.

We feel that the raytraced shading techniques will continue to develop. As more hardware manufacturers and software developers start adopting these techniques, new optimizations will follow, reducing the performance cost. We think that we are still at the beginning of this new era of real-time computer graphics rendering. Raytracing has the potential to replace the traditional rasterization approach and become an industry standard in a couple of years. If it does, then gamers around the world are in for a treat, as more and more titles release with the level of graphics we could have only dreamt about just a couple of years before. Until then, there is still much work to do and we are glad to have made our contribution to this process.

The raytraced ambient occlusion implementation in The Schmetterling engine has been developed by Andrzej Czajkowski at EXOR Studios. We would also like to thank the great team of engineers at AMD, with special acknowledgement to Marco Weber, Steffen Wiewel and Dominik Baumeister who helped us to optimize our implementation.
