The goal of this series is to provide intuition for, and a deep understanding of, Neural Networks by looking at how tuning parameters can affect what and how they learn. The reader will need some prior knowledge of Neural Networks.

So you’ve tried all sorts of activations, architectures, learning rates, intializations, batch sizes etc. and your model still isn’t learning… It could simply be you haven’t normalized your data!

In this first part of the series we will see just how much of an effect normalizing your data can have on your Network’s ability to learn.

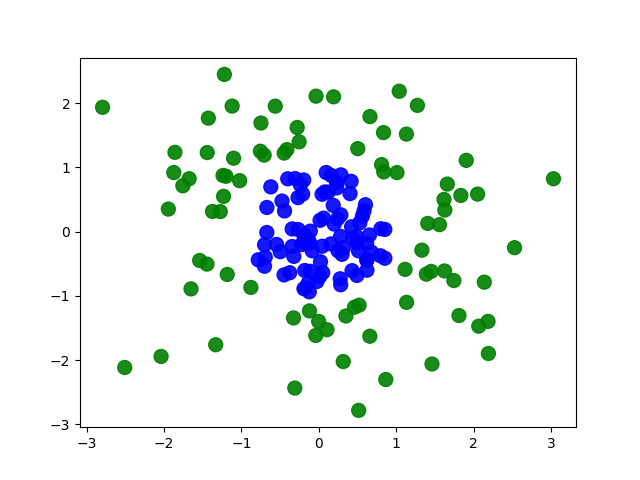

We start with a classification task involving two concentric circles

We generated the data with the following rule: if x[0]² + x[1]² ≥ 1 then Y = 1 (in green) otherwise Y = 0 (in blue). Our goal is to discover a good approximation to this rule from the sample we’ve generated. To accomplish this task we set up a simple Neural Network with a single hidden layer containing 2 neurons:

model = keras.models.Sequential()

model.add(layers.Dense(2, input_dim=2, activation=SOME_ACTIVATION))

model.add(layers.Dense(1, activation='sigmoid'))

model.compile(loss="binary_crossentropy")

We can visualize the Network as such:

Note: We use the sigmoid activation for the last layer and binary_crossentropy loss in order to leverage intuition from Logistic Regression where the decision boundary between our two classes will be a linear function of h0 and h1. Recall, if we don’t activate the hidden layer then h0 and h1 are linear functions of x0 and x1, meaning we are back to traditional Logistic Regression.

For now we will activate our hidden layer using ReLU (we will look at activation functions in depth in Part II). While training our model above, we can plot the decision boundary at regular intervals through the learning process to get the following animation:

ReLU seems to give us access to linear pieces with which to approximate the decision boundary. Recall our Network is performing Logistic Regression on the learned features h0 and h1 so we can plot the decision boundary in the learned feature space:

For the most part it seems that when h0 is activated, h1 isn’t and vice versa. When h0 or h1 is activated, it outputs the linear combination of the x0 and x1 that activated it. We can intuit that our Network is linearly separating the portion of the data that makes h0 activate and the portion of the data that makes h1 activate.

Recall that the gradient responsible for nudging a set of weights associated with a neuron is proportional to the activation of the neuron. When the activation is 0 for a ReLU-activated neuron, the gradient for the weights associated with that neuron is 0. If the neuron’s activation is 0 for the portion of the data used to estimate the loss then the weights are not updated during BackPropagation.

The number of neurons that activate can then have a large impact on what our Network is able to learn. It could be possible for example that none of our data activate any of the neurons at initialization. That would be bad as our Network would not be able to learn.

How likely is this to happen?

Reading the Keras documentation we see that the default initialization method is to generate weights uniformly at random on the interval [-L, L] where L depends on the layer.

Let’s keep using the Network above and assume that all weights come from U(-1, 1). If x[0] and x[1] each also comes from U(-1, 1), what is the distribution of the number of activated neurons?

On average it seems we can expect half the neurons to be activated. But by which proportion of the data?

We saw above our Network is linearly separating the portion of the data that makes h0 activate and the portion of the data that makes h1 activate. If no portion of the data activates h1 then we are back to Logistic Regression. How likely is that to happen?

The distribution of the proportion of our data that makes a given neuron activate at initialization is tough to compute so let’s run a simulation:

So we have a good chance that about half our data, for this particular Network, will activate a given neuron after initialization — each neuron getting a random 50% chunk of our data. This seems to be due to the symmetry of our data around 0.

What happens if our data is not centered around 0? Here is an animation of the distribution of the proportion of data responsible for activating a given neuron as our data shifts from U(-1, 1) to U(8, 10)

As our data shifts farther from 0, neurons activate based on either all the data or almost none of it after initialization. This is exactly the situation we want to avoid.

If a neuron only activates for a very small portion of our data then its associated weights will rarely, if ever, get updated. If the neuron activates on all our data then the neuron is doing global logistic regression. All such neurons will end up learning the exact same set of weights because they are trained on the same portion of our data (all of it).

Such a Network then has a good chance of just doing standard Logistic Regression. This is the case for all neurons so adding more layers and neurons won’t help us.

Does centering around 0 affect the distribution of the number of activated neurons?

So we can still expect about half the neurons to be activated — but this time by close to all or none of our data.

For each data point we feed to our network, we can visualize the activations of each neuron. Below are the activations right after initialization (as above the hidden layers — in green — use the ReLU activation and the last layer is using sigmoid):

We see a lot of movement and variability because each neuron has access to a random portion (which we expect to be around 50%) of our data. How much overlap there is between these portions for any two neurons is random (in fact U(0, 1/2)). Here is what the network looks like after a few epochs:

And the animation of the learning process:

Now take a look at what happens when the data is unnormalized! For data centered at [10, 10], here is the network after initialization:

The network seems frozen. And after a few epochs of training this does not improve because it requires finding weights that only activate portions of the data which we’ve established has very low probability of occuring:

We haven’t learned anything new… Here is the animation of the learning process:

So is this just a ReLU issue? Or can we use a different activation function to bypass the learning dead-end that our unnormalized data has created?

Most activations activate based on some threshold centered around an arbitrary point (like 0, 1/2, or 1). So we can expect the same behavior from other activation functions.

Here are the activations after initialization for the same Network as above but using the sigmoid activation for all the hidden layers:

And after training:

There doesn’t seem to be much of a difference between the activations at initialization and the activations after learning. We can plot the decision boundary through the learning process to see what was learned:

It looks like our model is still just doing Logistic Regression.

So look at the data before trying all sorts of activation functions, intialization methods, architectures etc. Normalizing your data is not just good practice in order to leverage the power of Neural Networks — it’s basically a requirement.