Today’s artificial intelligence technology aims to mimic nature and to replicate in a computer the decision-making abilities that people develop naturally.

Artificial neural networks, like living brains, are made up of many individual cells. When a cell becomes active, it transmits a signal to the cells it is connected to. The signals a cell receives are added together to determine whether it will become active as well. The system’s behavior is determined by the way one cell influences the activity of the next. These parameters are adjusted in an automatic learning process until the neural network can accomplish a given task.
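The summing behavior described above can be sketched in a few lines of Python. This is a minimal illustration of a generic threshold neuron, not the neuron model used in the research; the function name `neuron_output`, the weights, and the bias are hypothetical values chosen for the example.

```python
def neuron_output(inputs, weights, bias):
    """Return 1 if the weighted sum of incoming signals exceeds zero, else 0."""
    # Each incoming signal is scaled by the strength of its connection (its weight).
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


# Hypothetical example: three upstream cells, two of them currently active.
incoming = [1, 0, 1]
weights = [0.6, -0.4, 0.3]   # assumed connection strengths
bias = -0.5                  # assumed activation threshold offset

active = neuron_output(incoming, weights, bias)
```

Learning, in this picture, means adjusting `weights` and `bias` until the network's outputs match the desired behavior.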

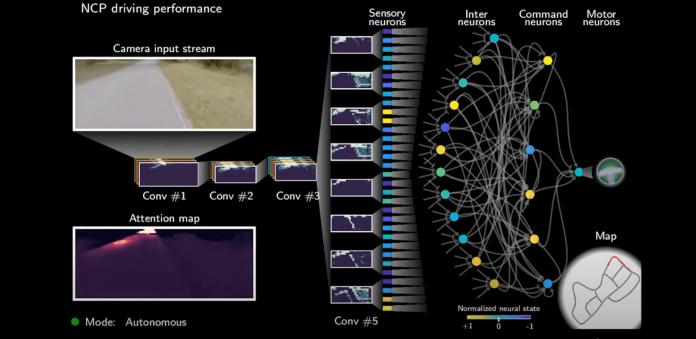

Inspired by biological neurons, a group of researchers from MIT CSAIL, TU Wien (Vienna), and IST Austria has created a novel AI system called Neural Circuit Policies (NCPs) that is based on the brains of small creatures like threadworms. With just a few artificial neurons, this revolutionary AI system can control a vehicle.

The system’s networks are sparse, meaning that not every cell is linked to every other cell, which simplifies the network. According to the researchers, the system handles noisy input significantly better, and because of its simplicity, its mode of operation can be explained in detail.
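To make the idea of sparsity concrete, the sketch below builds a connection mask in which each cell links to only a few cells in the next layer and compares the link count with a fully connected layer. The layer sizes, the `fan_out` value, and the random wiring rule are assumptions made for illustration; they are not the wiring used in the research.

```python
import random


def sparse_mask(n_in, n_out, fan_out, seed=0):
    """Build an n_in x n_out 0/1 mask where each input cell has fan_out targets."""
    rng = random.Random(seed)
    mask = [[0] * n_out for _ in range(n_in)]
    for i in range(n_in):
        # Pick fan_out distinct target cells for input cell i.
        for j in rng.sample(range(n_out), fan_out):
            mask[i][j] = 1
    return mask


# Hypothetical sizes: 12 cells feeding 6 cells, 2 outgoing links per cell.
mask = sparse_mask(n_in=12, n_out=6, fan_out=2)
n_links = sum(map(sum, mask))   # connections actually present
dense_links = 12 * 6            # connections in a fully connected layer
```

Only the connections marked with 1 carry a trainable weight, so a sparse layer of this kind has fewer parameters to learn and fewer pathways to inspect.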

Putting the Ideas to the Test

The team chose a particularly important task to test the new ideas: keeping a self-driving car in its lane. The neural network receives camera images of the road as input and must decide automatically whether to steer right or left.

Most deep learning models use millions of parameters to learn complex tasks like autonomous driving. The new method, on the other hand, shrinks the networks by two orders of magnitude. The team used only 75,000 trainable parameters in their systems.

The new system consists of two parts. The camera input is first processed by a convolutional neural network, which extracts structural features from the incoming pixels. This network determines which parts of the camera image are relevant and important, and then passes signals to the crucial part of the network: a “control system” that steers the car.

Both subsystems are stacked and trained at the same time. The team collected many hours of traffic videos of human drivers in the Boston area and fed them into the network, along with information on how to steer the car in any given situation. This was done until the system learned to connect images automatically with the appropriate steering direction and handle new situations independently.
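Learning to steer from recorded human driving is a form of imitation learning. The toy sketch below shows the principle on a much simpler problem: fitting a one-parameter-pair model to pairs of (feature, human steering) by gradient descent. The feature `lane_offset`, the data values, and the linear model are all hypothetical stand-ins; the real system learns from camera images with a neural network.

```python
def train_steering_model(features, steering, lr=0.1, epochs=500):
    """Fit steering ~ w * feature + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(features)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum((w * x + b - y) * x for x, y in zip(features, steering)) * 2 / n
        grad_b = sum((w * x + b - y) for x, y in zip(features, steering)) * 2 / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical demonstrations: how far the car sits from the lane center,
# and the steering value the human driver applied in that situation.
lane_offset = [-1.0, -0.5, 0.0, 0.5, 1.0]
human_steer = [0.8, 0.4, 0.0, -0.4, -0.8]

w, b = train_steering_model(lane_offset, human_steer)
predicted = w * 0.25 + b   # steering for an offset not seen during training
```

After training, the model produces a steering value for an offset it was never shown, which is the same generalization the article describes at a far larger scale.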

The control element of the system, which converts the data from the perception module into a steering command, consists of only 19 neurons, far fewer than in prior state-of-the-art models.

An actual autonomous vehicle was used to test the new deep learning model. The researchers explain that this approach makes it possible to see where the network focuses its attention while driving. The new networks concentrate on two areas of the camera image: the curbside and the horizon. The findings demonstrate that the role of each cell in any driving decision can be identified, and that the functions and behaviors of individual cells can be deciphered. The researchers state that this level of interpretability is not achievable for larger deep learning models.

Robustness

To see how resilient NCPs are compared to previous deep models, the team perturbed the input images and evaluated how well the agents could deal with the noise. They found that while this is an intractable problem for other deep neural networks, the NCPs showed strong tolerance to input artifacts.
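A robustness check of this kind can be sketched as follows: add random noise to each input frame and measure how far the policy's output drifts from its target. The article does not describe the exact perturbation or metric used, so the Gaussian noise, the mean absolute error, and the stand-in `toy_policy` are all assumptions made for illustration.

```python
import random


def add_noise(pixels, sigma, rng):
    """Return a copy of pixels with Gaussian noise added, clipped to [0, 1]."""
    return [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in pixels]


def mean_abs_error(policy, frames, targets, sigma, seed=0):
    """Average absolute steering error when every frame is perturbed."""
    rng = random.Random(seed)
    errors = [abs(policy(add_noise(f, sigma, rng)) - t)
              for f, t in zip(frames, targets)]
    return sum(errors) / len(errors)


def toy_policy(pixels):
    """Hypothetical stand-in policy: right-half brightness minus left-half."""
    half = len(pixels) // 2
    return sum(pixels[half:]) / half - sum(pixels[:half]) / half


# Hypothetical four-pixel "frames" with brightness values in [0, 1].
frames = [[0.2, 0.2, 0.8, 0.8], [0.9, 0.7, 0.1, 0.3], [0.5, 0.5, 0.5, 0.5]]
targets = [toy_policy(f) for f in frames]   # outputs on the clean frames

clean_error = mean_abs_error(toy_policy, frames, targets, sigma=0.0)
noisy_error = mean_abs_error(toy_policy, frames, targets, sigma=0.2)
```

Running the same measurement on two different models at increasing values of `sigma` gives a simple way to compare how gracefully each one degrades.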

The proposed approach offers two significant advantages: interpretability and resilience. In addition to these, the researchers state that the new methodology can cut training time and make AI work in relatively simple systems.

The NCPs enable imitation learning in various scenarios, ranging from automated warehouse operations to robot movement. The team believes that biological nervous system computation principles can become a tremendous resource for constructing high-performance interpretable AI.

Paper: https://www.nature.com/articles/s42256-020-00237-3

GitHub: https://github.com/mlech26l/keras-ncp

Source: https://www.csail.mit.edu/news/new-deep-learning-models-require-fewer-neurons