Artificial intelligence (AI) helps organisations make timely and accurate decisions from data in almost every field of study.

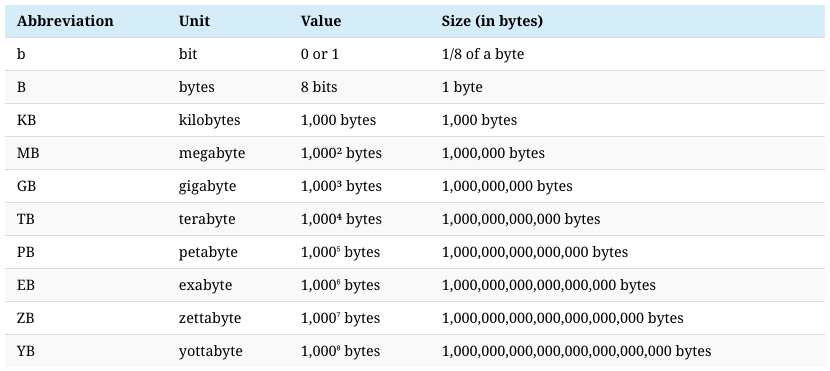

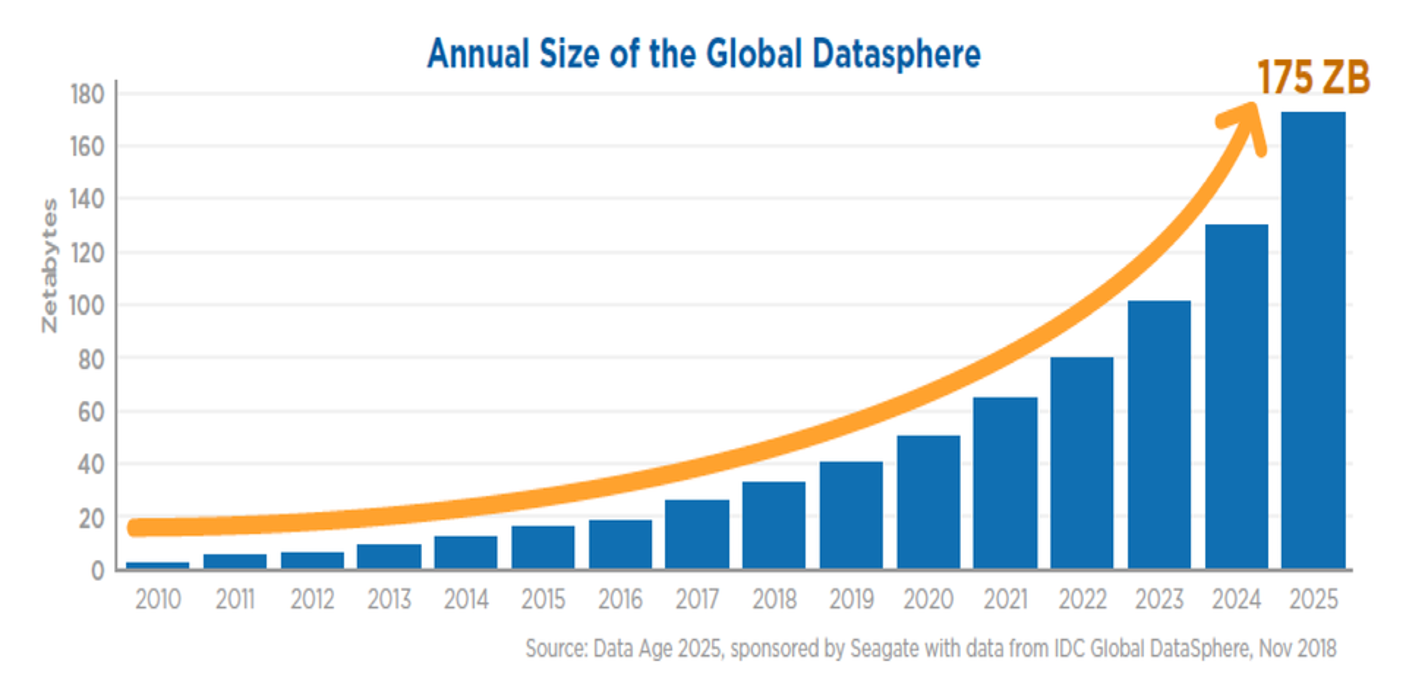

The volume of data keeps growing. Statista estimates that 59 Zettabytes of data were produced in 2020 and forecasts that 74 Zettabytes will be produced in 2021.

A Zettabyte is a trillion gigabytes!
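As a quick sanity check on these figures, the short Python sketch below converts Zettabytes to gigabytes and computes the year-on-year growth implied by the Statista numbers. It assumes decimal (SI) prefixes, where 1 ZB = 10^21 bytes and 1 GB = 10^9 bytes; binary units such as zebibytes and gibibytes would give slightly different results.

```python
# Decimal (SI) prefixes are assumed; binary units (GiB, ZiB) would differ slightly.
BYTES_PER_GB = 10**9
BYTES_PER_ZB = 10**21


def zettabytes_to_gigabytes(zb: int) -> int:
    """Convert a whole number of zettabytes to gigabytes using decimal prefixes."""
    return zb * BYTES_PER_ZB // BYTES_PER_GB


# One zettabyte is 10**12 gigabytes, i.e. a trillion gigabytes.
print(zettabytes_to_gigabytes(1))  # 1000000000000

# Year-on-year growth implied by the Statista figures: 59 ZB (2020) -> 74 ZB (2021).
growth = (74 - 59) / 59
print(f"Growth 2020 -> 2021: {growth:.1%}")  # 25.4%
```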

The World Economic Forum (WEF) illustrates the scale of this data in the chart below:

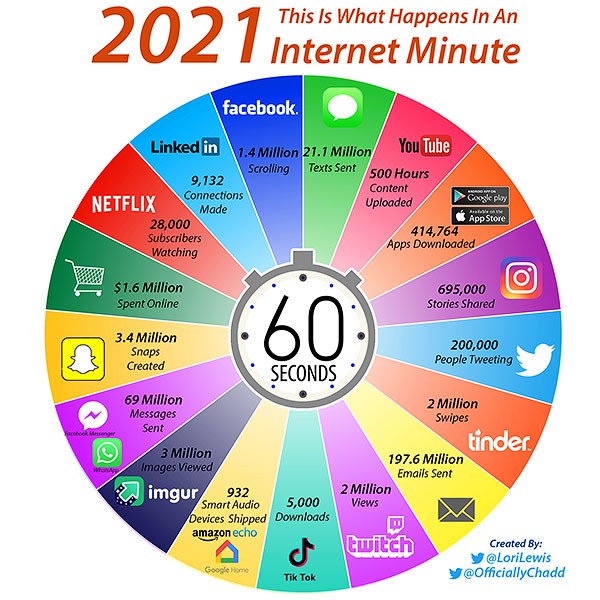

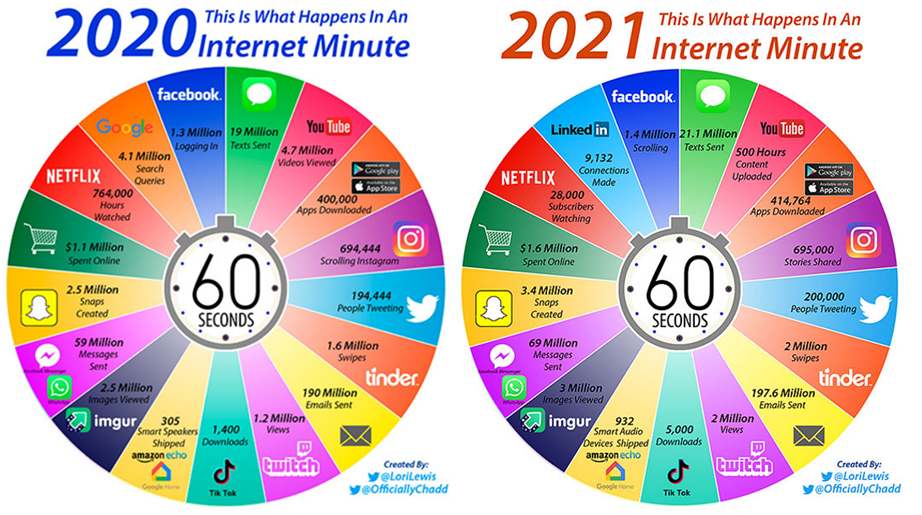

Lori Lewis produced the following infographic for 2021.

A side-by-side comparison of 2020 and 2021 shows the continued growth in the volume of digital data.

Source for image above: Doug Austin, Here is Your 2021 Internet Minute, by Lori Lewis

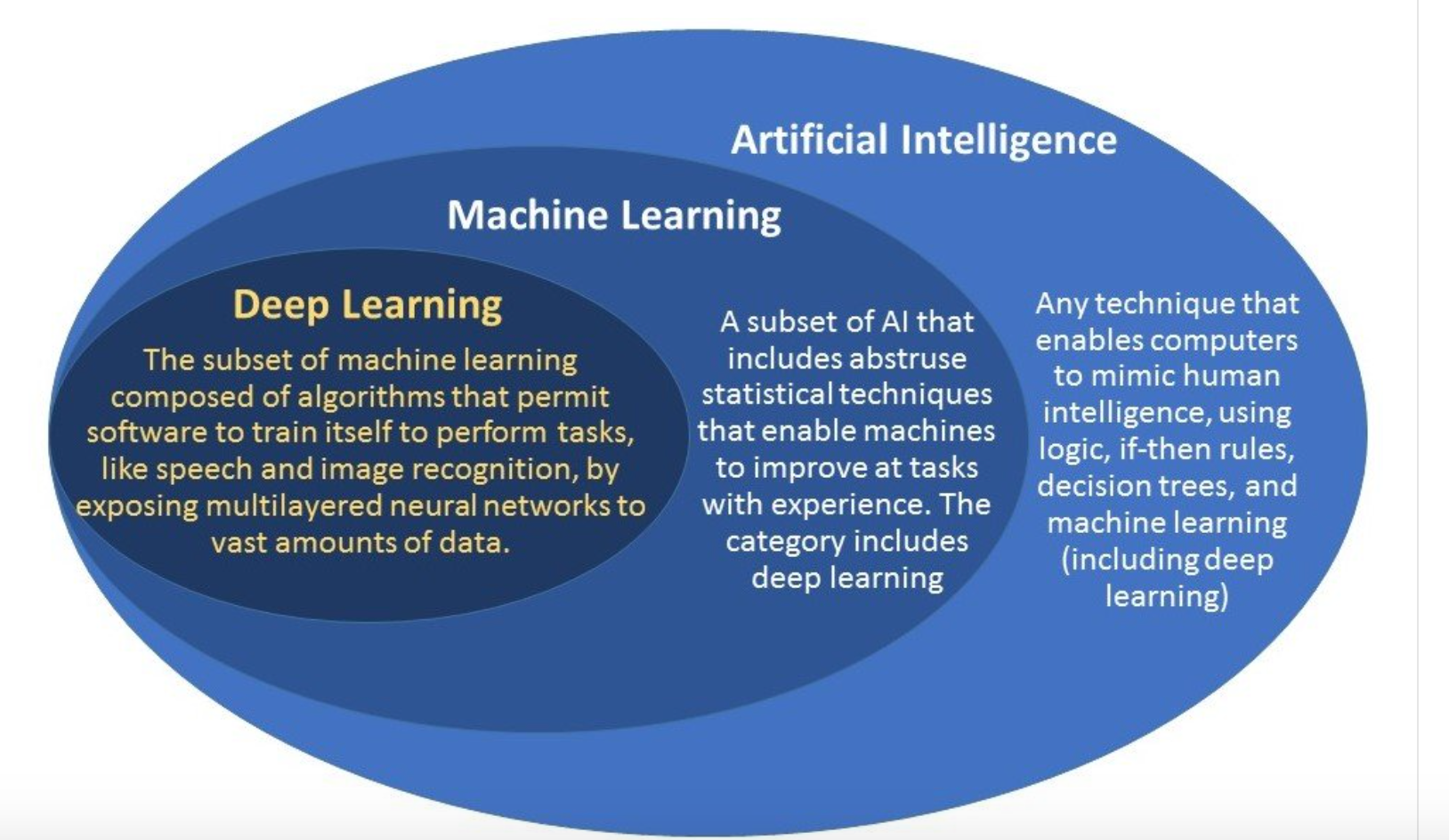

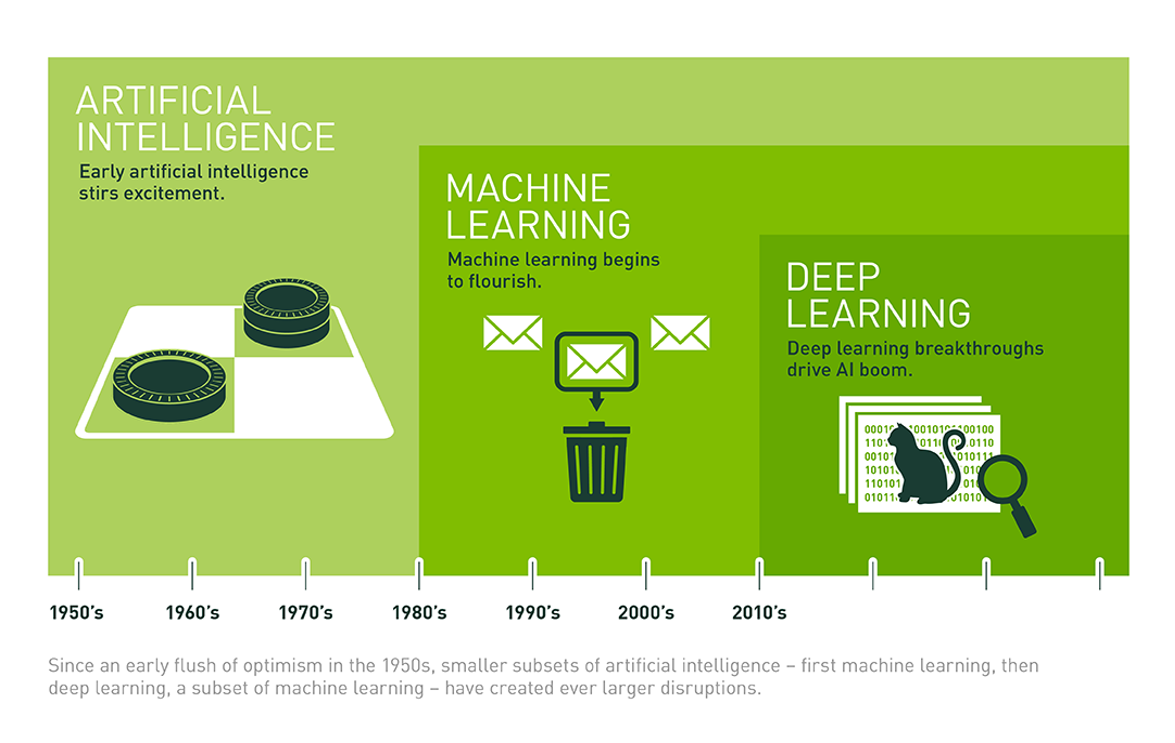

Artificial Intelligence (AI) is concerned with developing computing systems capable of performing tasks that humans are very good at, for example recognising objects, recognising and making sense of speech, and making decisions in a constrained environment. It was founded as a field of academic research at Dartmouth College in 1956.

Source for image above: Meenal Dhande, What is the Difference between AI, Machine Learning and Deep Learning?, Geospatial World

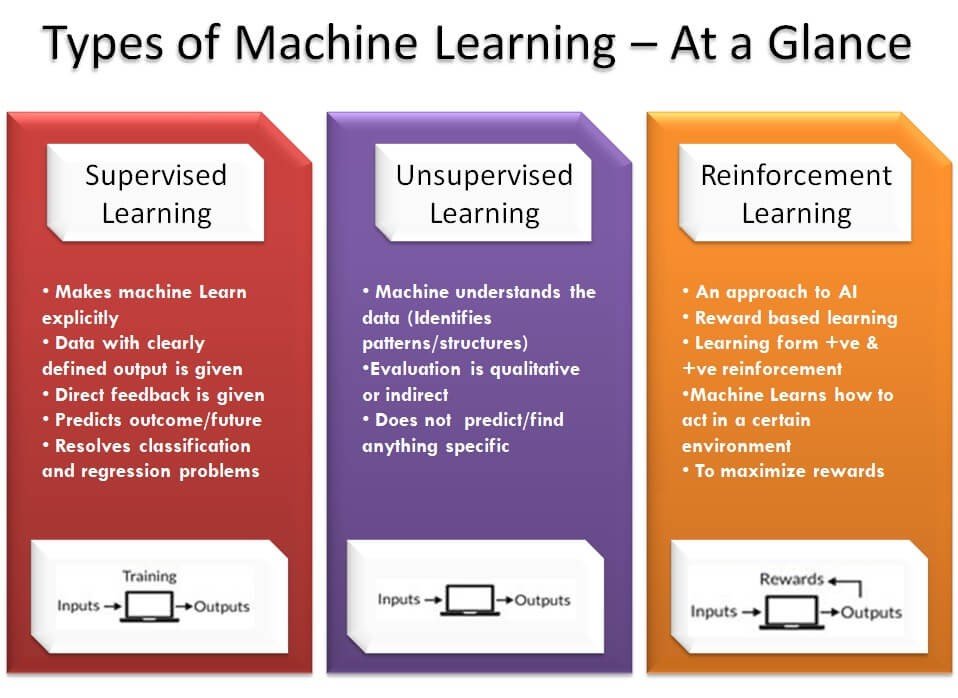

Machine Learning is defined as the field of AI that applies statistical methods to enable computer systems to learn from the data towards an end goal. The term was introduced by Arthur Samuel in 1959.

Neural Networks are biologically inspired networks that extract abstract features from the data in a hierarchical fashion. Deep Learning refers to the field of neural networks with several hidden layers. Such a neural network is often referred to as a Deep Neural Network.

Source for image above: Michael Copeland, What’s The Difference Between AI, Machine Learning And Deep Learning, NVIDIA Blogs
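To make "several hidden layers" concrete, here is a minimal, untrained sketch in plain Python of a feedforward network with three hidden layers. The layer sizes, the random weight initialisation and the ReLU activation are illustrative assumptions rather than a recommended architecture; a real network would learn its weights from data.

```python
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (weights, biases)


def relu(v: Vector) -> Vector:
    """Rectified linear unit applied element-wise."""
    return [max(0.0, x) for x in v]


def dense(x: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def build_network(sizes: List[int], seed: int = 0) -> List[Layer]:
    """Create randomly initialised layers; sizes lists the units per layer, input first."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def forward(network: List[Layer], x: Vector) -> Vector:
    """Pass x through every layer; hidden layers use ReLU, the output layer stays linear."""
    for i, (weights, bias) in enumerate(network):
        x = dense(x, weights, bias)
        if i < len(network) - 1:
            x = relu(x)
    return x


# 4 inputs -> hidden layers of 8, 8 and 4 units -> 2 outputs.
net = build_network([4, 8, 8, 4, 2])
print(len(net) - 1, "hidden layers")  # 3 hidden layers
print(forward(net, [0.5, -1.0, 0.25, 2.0]))
```

Each hidden layer transforms the output of the layer before it, which is the sense in which features are built up hierarchically; in practice the weights would be learned by backpropagation rather than left at their random initial values.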

The reasons for the advance of AI over the last decade include the following:

- Growth in data as the internet, and in particular smartphones, have rapidly grown;

- Linked to the above, the arrival of 4G, which enabled smartphone apps to take off, including digital media such as Facebook, Instagram, Twitter and TikTok, and ecommerce such as Amazon, Alibaba and eBay;

- The reduced cost and increased availability of storage with the rise of the cloud;

- Graphics Processing Units (GPUs), which allow for parallel processing and have made Deep Learning more scalable;

- Advances in techniques, such as new Boosting algorithms (see section below) and, in particular, Deep Neural Networks, as researchers developed techniques to regularise networks and prevent overfitting.

Much of the revolution in AI over the past decade has been driven by Machine Learning and Deep Learning. Gradient boosting techniques such as XGBoost, Light Gradient Boosting Machine (LightGBM) and CatBoost have proven highly effective for supervised learning on structured datasets, whilst Deep Learning has been particularly effective with unstructured data. Deep Neural Networks have led the way in Computer Vision, with Convolutional Neural Networks (CNNs) and, more recently, Vision Transformers (ViT), including in Generative Adversarial Networks (GANs), and in Natural Language Processing (NLP), where Transformers with Self-Attention are applied to text analytics and speech to text. Transformer models have also made headway in recent times with problems in chemistry, biology and drug discovery, one example being AlphaFold2, which solved the challenge of protein folding, and in time series, with Temporal Fusion Transformers for Multi-Horizon Time Series Forecasting. In gaming and robotics we have seen the rise of Deep Reinforcement Learning (DRL), plus promising hybrid AI techniques such as Deep Neuroevolution and NeuroSymbolic AI, which seeks to combine Deep Neural Networks with Symbolic AI.
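The Self-Attention mechanism at the heart of Transformers can be sketched in a few lines. The example below is a single-head version of scaled dot-product attention, softmax(QK^T / sqrt(d_k))V, written in plain Python; the toy token embeddings and the identity projection matrices are hypothetical stand-ins for the learned weights a real Transformer would use.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    """Single-head scaled dot-product self-attention over the rows (tokens) of x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # how strongly each token attends to every other token
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)  # each output is a weighted mix of the value vectors


# Three toy "tokens" with 2-dimensional embeddings; identity projections for illustration.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out = self_attention(tokens, identity, identity, identity)
for row in out:
    print([round(v, 3) for v in row])
```

Because every output row is a weighted average of the value vectors, each token's new representation blends in information from the whole sequence, which is what lets Transformers model long-range context.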

A key point is that the era of big data analytics has also been the era of big tech domination of the AI space. The big tech majors have most of the access to the vast data being created and many of the strongest AI teams.

Gartner reported that as many as 85% of Machine Learning and Data Science projects fail; VentureBeat reported that 87% of Data Science projects never make it to production; and Gartner went on to state that “Through 2022, only 20% of analytic insights will deliver business outcomes”. Many of these failures occur outside the Tech giants, which have strong Data Science teams, access to large amounts of data, and strong back-end and front-end engineering teams to implement Data Science projects successfully. Crucially, the Tech majors also possess an organisational culture that generally enables AI researchers and Data Scientists to flourish. There is greater understanding from the C-Team, and the Tech majors view AI and Data Science as a revenue generator. For example, Amazon has stated that 35% of its sales came from recommendation algorithms, and Alibaba achieved record sales of $75.1Bn on Singles Day with an AI algorithm deployed to provide targeted personalisation.

Gartner views the three key reasons for failing with AI as the following:

- Availability and quality of Data;

- Quality of, and investment into, the Data Science team;

- An agreed and clear objective between the Data Science and Business teams on the goal of the Machine Learning and Data Science projects – this was viewed as the main issue.

Will AI and Data Science end up being dominated by a few firms? A cynic may say that the Tech majors would be happy with their current dominance of Big Data and AI. However, the emergence of 5G networks and a push by the likes of Apple and others towards the Edge will hopefully mean that we develop a next generation of AI that is more accessible and able to scale across the wider economy.

It is submitted that, as the era of the Internet of Things (IoT) arrives with standalone 5G networks in the 2020s, exciting new products and services will emerge, most likely around 2024-2025, with embryonic development from 2022 onwards as we start to generate data from truly standalone 5G networks and larger-scale investment occurs (see section below on What is 5G?).

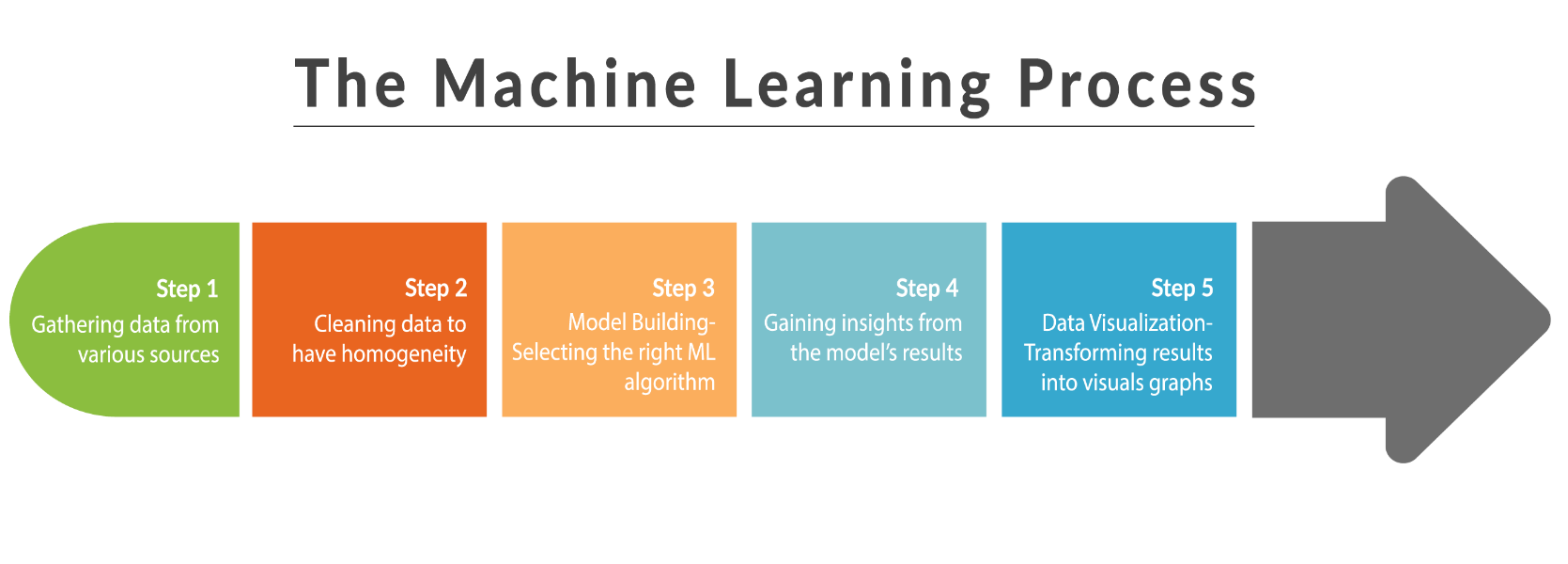

Supervised Machine Learning Requires Quality Data!

A challenge with Machine Learning and Deep Learning projects in a production environment is that they require large amounts of quality labelled data to perform well. The majority of Machine Learning in practice remains based upon Supervised Learning approaches.

Source for image above: Analytics Vidhya, Artificial Intelligence Demystified

Data quality and availability are of key importance for Supervised Learning because we train an algorithm against a labelled ground truth: if the training data is of low quality or incorrectly labelled, the algorithm will learn garbage. Garbage In, Garbage Out (GIGO).

Source for image above: Analytics Vidhya, Artificial Intelligence Demystified
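The GIGO point can be illustrated with a toy experiment. The sketch below trains a 1-nearest-neighbour classifier (chosen here only because it memorises its training labels, which makes the effect easy to see) on a hypothetical one-dimensional problem, once with correct labels and once with every fourth training label flipped, and then scores both against the true labels.

```python
from typing import List, Tuple


def true_label(x: float) -> int:
    # Hypothetical ground truth: class 1 when x exceeds 0.5.
    return int(x > 0.5)


def nearest_neighbour_predict(train: List[Tuple[float, int]], x: float) -> int:
    # 1-nearest-neighbour: copy the label of the closest training point.
    _, label = min(train, key=lambda p: abs(p[0] - x))
    return label


def accuracy(train: List[Tuple[float, int]], test_xs: List[float]) -> float:
    correct = sum(nearest_neighbour_predict(train, x) == true_label(x) for x in test_xs)
    return correct / len(test_xs)


n = 200
train_xs = [i / n for i in range(n)]
test_xs = [(i + 0.3) / n for i in range(n)]

clean = [(x, true_label(x)) for x in train_xs]
# Garbage in: every 4th training label is flipped (25% systematic labelling errors).
noisy = [(x, 1 - y if i % 4 == 0 else y) for i, (x, y) in enumerate(clean)]

print(f"clean labels: {accuracy(clean, test_xs):.3f}")  # 0.995
print(f"noisy labels: {accuracy(noisy, test_xs):.3f}")  # 0.755
```

With clean labels only the test point just above the 0.5 boundary is misclassified; with a quarter of the labels corrupted, accuracy falls to 75.5% because the model faithfully reproduces the errors it was trained on.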

It may be argued that finding and labelling vast amounts of data has been much easier for social media and ecommerce firms in the digital space. However, much of the growth in data later this decade will come from the Internet of Things (IoT) around the Edge of the network. Furthermore, one hopes that AI research will focus increasingly on making Deep Neural Networks more computationally efficient (squeezing more out of less), rather than on ever larger networks that are exciting in their own way but not especially relevant to most users or to the overall economy. AI research also needs to focus on enabling Deep Neural Networks to learn from smaller data sets, just as a child can learn what an apple is from a handful of examples; this is where we want AI to get to, so that AI systems can learn on the fly and respond to dynamic environments. Nevertheless, legacy (non-tech) firms can obtain a great deal of value from AI by keeping it simple and targeting low-hanging fruit at the outset, rather than costly moonshots that may be beyond their current capabilities. Once an organisation has successfully implemented an AI project, it can take that internal learning and know-how and scale it to other projects and areas across the company.

A further problem is that AI is a highly technical area full of jargon, with Data Science spanning statistics, mathematics and coding, which may sound like an intimidating alien language to the Business team. Equally, the Data Science team often lacks domain knowledge of the business side and may spend many months building a technical solution that delivers no business benefit.

However, if we are to scale AI to the rest of the economy and move beyond digital media and ecommerce, we will have to improve the success rates of AI projects.

Examples of firms outside of Digital Media and Ecommerce that have had success with AI projects include the following:

Frankfurt, Germany, Sep 10, 2019: BMW Concept 4 Prototype Car at IAA, fully electric, fully connected, highly autonomous driving eco friendly future SAV car by BMW Editorial credit: Grzegorz Czapski / Shutterstock.co

Tesla has rocked the automotive world by being a tech and AI company!

Editorial credit: Mike Mareen / Shutterstock.com

Tesla Roadster standing on the viewpoint in Tenerife

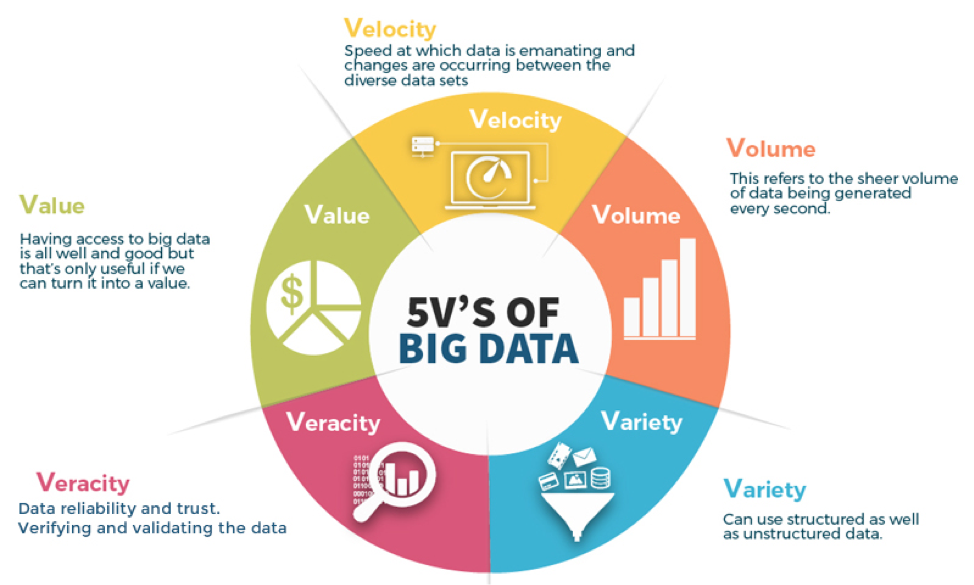

Increasingly, firms need to ask how they can generate value with Big Data Analytics, as noted in The Data Veracity – Big Data (source for image below).

Going forward, as standalone 5G networks emerge and scale around the world, data is only going to grow. It is safe to assume that “Big Data will only get much bigger!”

In fact, IDC and Seagate predict that by 2025 we will be producing 175 Zettabytes of data, and that a vast 30% of this will be consumed in real time. That real-time share alone, roughly 52.5 Zettabytes, is more than two thirds of the 74 Zettabytes forecast for the whole of 2021! To manage this deluge of real-time data we will need to apply state of the art (SOTA) Machine Learning and Deep Learning techniques.

Source for image above: IDC Seagate; Tom Coughlin, 175 Zettabytes By 2025, Forbes
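The arithmetic behind these forecasts is easy to check. The sketch below computes the real-time share of the IDC/Seagate 175 Zettabyte forecast, compares it with the 74 Zettabytes quoted for 2021, and works out the compound annual growth rate implied by moving from the 59 Zettabytes quoted for 2020 to 175 Zettabytes in 2025. Combining figures from different forecasts in this way is an assumption made purely for illustration.

```python
def compound_annual_growth(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that takes `start` to `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


total_2025_zb = 175
real_time_pct = 30  # share consumed in real time, per the IDC/Seagate forecast
real_time_zb = total_2025_zb * real_time_pct / 100
print(f"Real-time data in 2025: {real_time_zb:.1f} ZB")                  # 52.5 ZB
print(f"Share of the 74 ZB forecast for 2021: {real_time_zb / 74:.1%}")  # 70.9%

# Illustrative only: mixes the 59 ZB (2020) estimate with the 175 ZB (2025) forecast.
cagr = compound_annual_growth(59, 175, 2025 - 2020)
print(f"Implied annual growth 2020-2025: {cagr:.1%}")  # 24.3%
```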

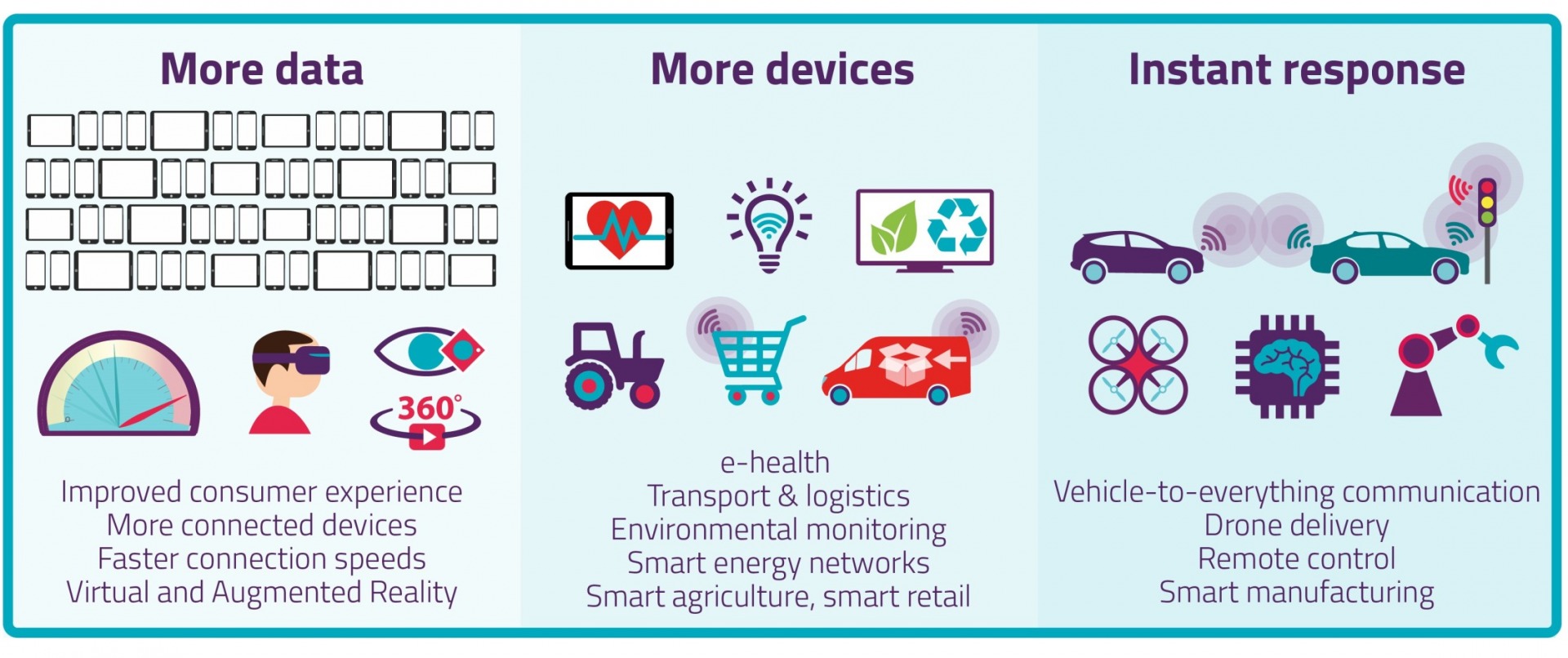

The UK telecoms regulator Ofcom produced the infographic below, setting out the advantages of 5G networks in terms of services and products.

What is 5G?

5G is the new generation of wireless technology. As Ofcom states “5G is much faster than previous generations of wireless technology. But it’s not just about speed. 5G also offers greater capacity, allowing thousands of devices in a small area to be connected at the same time.”

“The reduction in latency (the time between instructing a wireless device to perform an action and that action being completed) means 5G is also more responsive. This means gamers will see an end to the slight delays that can occur, when games can take time to reflect what they’re doing on their controller.”

“But the biggest differences go far beyond improving the way we use existing technology like smartphones or games consoles. The connectivity and capacity offered by 5G is opening up the potential for new, innovative services.”

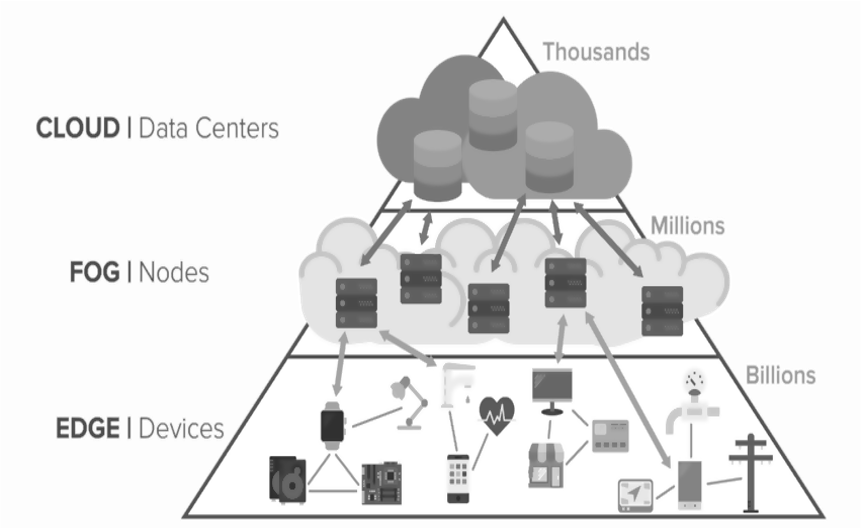

Crucially, as much of this data will be created around the Edge of the network, on internet-connected devices all around us, there will be an increasing need for AI on the Edge (on the device itself) rather than on a remote cloud server. This reduces unnecessary traffic, with its associated security risks, and latency (the time taken to transmit and receive messages between a back-end server and a front-end client). This will be an era of revolutionary change, in which change may occur at a faster pace than at any time in human history.

Source for image: University of Paderborn

Entirely new services and products will be created, limited only by our imagination. Equally, in order to scale AI across the rest of the economy, we will require new Machine Learning and hybrid AI techniques that enable the following:

- Explainability with model interpretability for areas such as healthcare, financial services, transportation and others;

- Causal reasoning and common sense;

- Deep Neural Networks that can train with smaller sets of data whilst retaining good performance – neural compression is key for the Edge!

- Move beyond Deep Learning inference on the Edge to AI that can learn on the fly and respond to dynamic environments (this will be key for autonomous systems);

- Generalise or multitask – whilst AGI remains an aspiration, we may perhaps be entering the era of broad AI, which sits between Narrow AI and AGI;

- Energy efficiency, so as to function in energy-constrained environments such as the Edge, where long battery life is key, and to reduce carbon footprint;

- The emergence of techniques such as Federated Learning with Differential Privacy that will be key to enabling Machine Learning to scale across decentralised data in sectors such as healthcare and financial services where data privacy is key.
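To give a flavour of how Federated Learning with Differential Privacy works, the sketch below simulates rounds of a procedure loosely following the DP-FedAvg approach on a hypothetical one-parameter model (y = w·x). Each client trains on data that never leaves it and shares only a weight update; the server clips each update to bound any single client's influence, averages the clipped updates and adds Gaussian noise. The clipping bound, noise multiplier, client data and learning rate are all illustrative assumptions, and the sketch does not compute a formal privacy budget (ε), which a real deployment would need to track.

```python
import random
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs that stay on the client


def local_update(w: float, data: Dataset, lr: float = 0.1, steps: int = 5) -> float:
    """Run a few gradient-descent steps on squared error locally; return only the change in w."""
    w_local = w
    for _ in range(steps):
        grad = sum(2 * (w_local * x - y) * x for x, y in data) / len(data)
        w_local -= lr * grad
    return w_local - w


def clip(delta: float, max_norm: float) -> float:
    """Bound each client's contribution so that no single client dominates the average."""
    return max(-max_norm, min(max_norm, delta))


def dp_fedavg_round(w: float, clients: List[Dataset], max_norm: float,
                    noise_multiplier: float, rng: random.Random) -> float:
    """One round: clipped local updates are averaged and Gaussian noise is added."""
    deltas = [clip(local_update(w, data), max_norm) for data in clients]
    avg = sum(deltas) / len(deltas)
    # Noise is scaled to the clipping bound divided by the number of clients.
    noise = rng.gauss(0.0, noise_multiplier * max_norm / len(clients))
    return w + avg + noise


rng = random.Random(42)
TRUE_W = 3.0
# Ten hypothetical clients (e.g. hospitals), each holding 20 private (x, y) pairs.
clients = [
    [(x, TRUE_W * x + rng.gauss(0.0, 0.1)) for x in [rng.uniform(0.5, 1.5) for _ in range(20)]]
    for _ in range(10)
]

w = 0.0
for _ in range(30):
    w = dp_fedavg_round(w, clients, max_norm=1.0, noise_multiplier=0.5, rng=rng)
print(f"learned w = {w:.2f} (true value {TRUE_W})")
```

Only the clipped, noised average ever updates the shared model; the raw (x, y) pairs never leave the clients. A larger noise multiplier gives stronger privacy at the cost of a noisier model.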

Furthermore, AI needs to be accessible to a wider audience, including the C-Team of organisations. Organisations also need to align their corporate and business strategies and organisational cultures more closely with tech initiatives, including thinking like a tech company and viewing AI and Data Science as potential sources of revenue or cost reductions, not just as a cost centre.

In addition, European countries (including the UK) need to think seriously about their AI and tech strategies. One wants to safeguard the public without hampering innovation. Moreover, AI, and tech in general, is such a fast-moving space that legal regulation would need to be updated regularly. For example, AI is a field of research, so the R&D team may need the freedom to experiment; when it comes to production, however, perhaps the industry should follow guideline standards, as is the case with accounting. This would help establish best practices for ethics and diversity, and ensure that firms use appropriate standards for data preparation, selection, training and testing of algorithms, as well as post-production checks and updates. It may also help to reduce the failure rate of data science projects. Such standards could be set up jointly by tech firms, AI startups and regulators, with an approved body responsible for administering them.

The articles that follow in this series will address the following:

- AI and organisational culture, corporate and business strategy and its role in value creation;

- AI and GDP plus job creation – dispelling the myth that AI will result in mass unemployment – at least in the 2020s;

- AI and data strategies to maximise the value of AI projects and to align strategy, value creation and ESG objectives, with AI and Industry 4.0 playing a key enabling role in this.

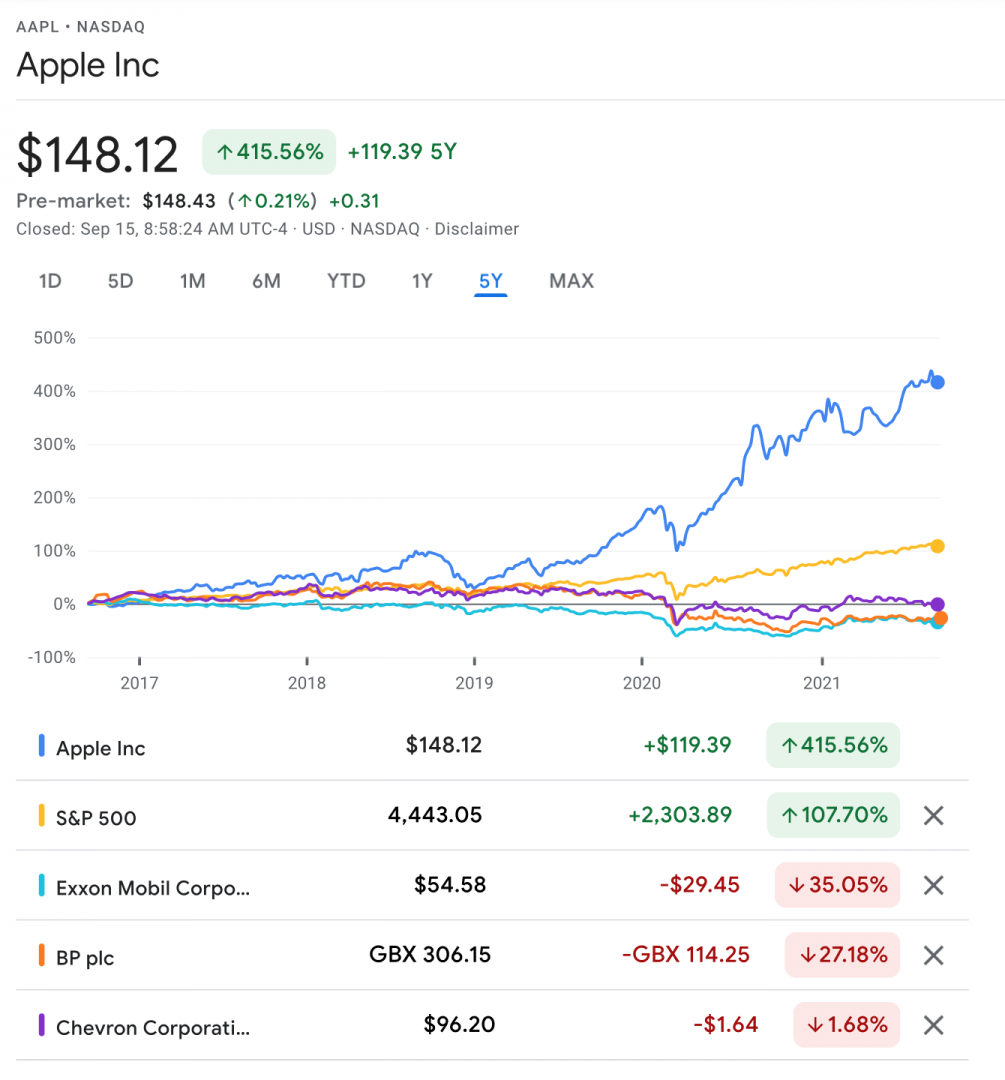

The firms that have successfully adopted AI have massively outperformed their underlying stock market indices, indicating the creation of economic and shareholder value. The challenge now is to create value across the rest of the economy as we emerge from the economic and human challenges of the Covid crisis and prepare to fight climate change.

Apple is currently the most highly valued company in the world, with Microsoft not far behind. Just over a decade ago Exxon Mobil held that crown, and other oil firms were also dominant in their respective indices.

Source for image above: Google Finance

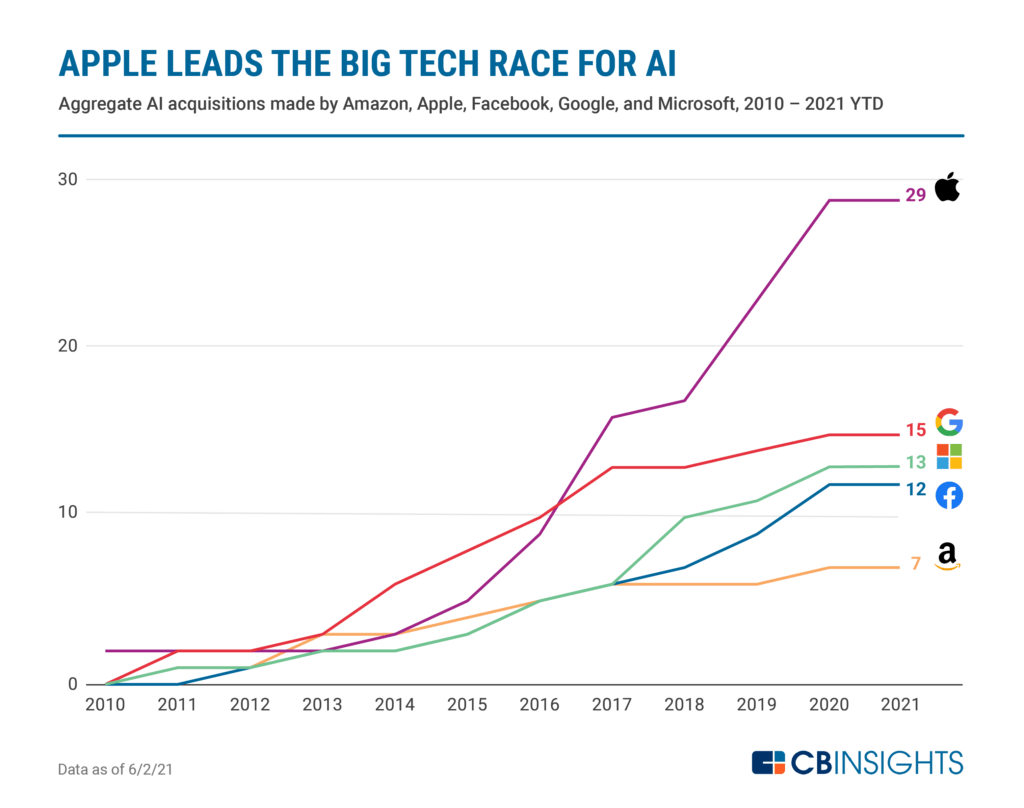

The rise of Apple as an icon of the tech sector marks a shift from the old economy to the new digital economy. According to CB Insights, Apple has also acquired 29 AI startups, far more than other tech majors; perhaps investment in AI does in fact pay off!

Source for image above: CB Insights, The Race For AI: Which Tech Giants Are Snapping Up AI Startups